Isaac Asimov, a professor of biochemistry and a science fiction writer, devised three laws of robotics: (1) a robot may not injure a human being or, through inaction, allow a human being to come to harm; (2) a robot must obey the orders given to it by human beings, except when such orders conflict with the first law; and (3) a robot must protect its own existence as long as such protection does not conflict with the first or second law.

However, Asimov died in 1992, and times have changed. His laws must be updated to address the evolution of robots and the risk associated with incorporating artificial intelligence (AI) in mobile robots such as humanoids and vehicles. AI and robots were created to assist humanity in its evolution; to perform dangerous, difficult, and repetitive activities; and to conduct research and data analysis more quickly than human beings. But as Asimov’s book I, Robot and science fiction TV shows and movies (e.g., Terminator, Battlestar Galactica, Singularity, M3GAN) have envisioned, robots unchecked have the potential to pose a danger to humanity. As a result, thought should be given to expanding the laws of robotics to include aiding humans (such as providing medical assistance) and protecting human life.

Stages of AI Evolution

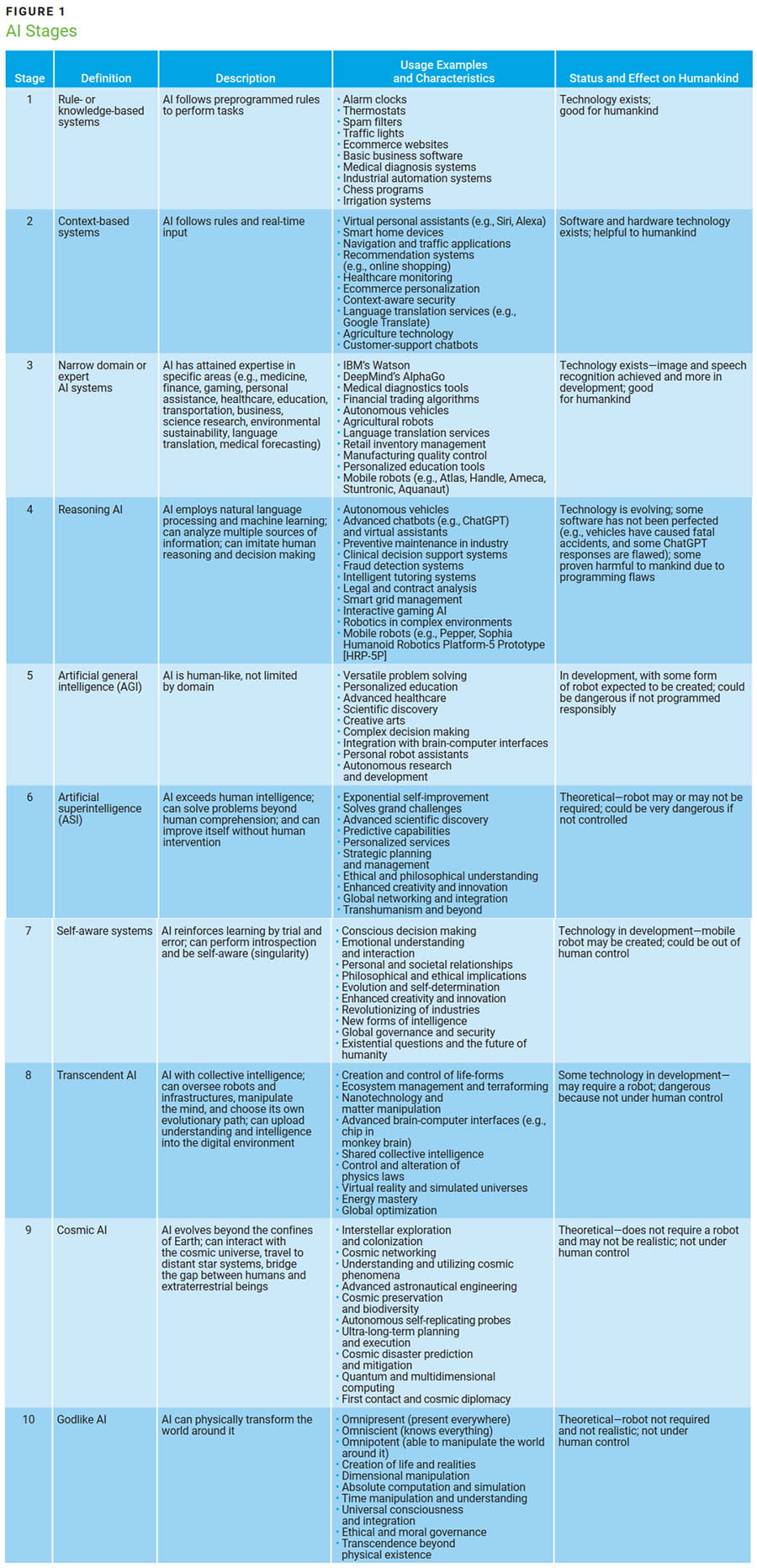

Ten stages of AI model development have been described (figure 1).1 These stages range from software and hardware control devices to godlike AI that can create life. Rapidly developing advancements in AI have led to concerns that AI will evolve until it is no longer under human control. AI was developed as a tool to aid humankind and should be accountable to those who developed it.

After stage 4, AI would not be under total human control, so care must be taken when developing products that can think for themselves.

Mobile Robots

Advances in mobile robots are happening constantly. In addition to moving on wheels or some version of legs, robots can now see, display emotions on their faces, and move objects while avoiding obstacles, and they are very strong and agile. They can also handle dangerous tasks and converse with humans about programmed and self-learned topics. AI programming is expected to expand robot capabilities and functionality as new ideas arise.

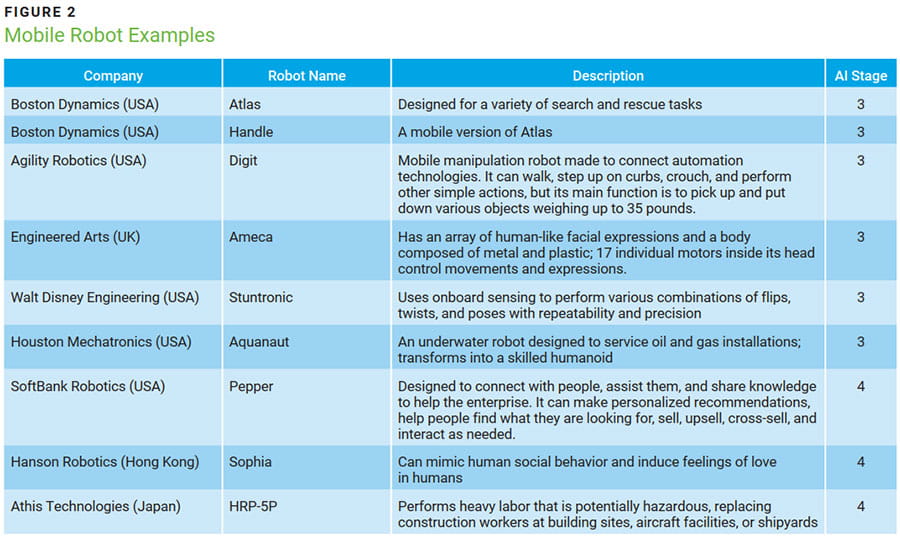

Mobile robots at AI stages 3 and 4 have been developed (figure 2). These robots do not function like humans because they are mostly demonstration models designed for mobility, strength, and various sensory or other capabilities. As with all software, AI’s ability is limited to the programming provided and the information or knowledge acquired via learning software. This technology will continue to grow as AI evolves and enhancements (e.g., flaw corrections, safeguards, new capabilities, expanded memory) are incorporated into these systems.

Virtual reality headsets and human control of robotic arms and legs are further examples of the expansion of AI. Headsets allow users to see virtual worlds, but they can also be connected to sensors that can see and hear the real world. Robotic arms and legs that are components of a mobile robot can be programmed to function like avatars that allow users to perform remote and dangerous work. For example, these applications can make surveying the moon and other planets easier, as robots can work for many hours and there are no concerns about radiation exposure or needing air to breathe. However, the human operator at the home base would need a communications link (e.g., a cell tower) to control the robot.

Good Robot Attributes

For a mobile robot to interact productively with humans (stages 3–7), it must have good attributes programmed into it, and it must be properly trained to ensure that it follows the laws of robotics. Good attributes include:

- Transparency in action and understanding to avoid deception and manipulation

- Justice and fairness to avoid implicit bias

- Nonmaleficence to avoid physical and psychological harm

- Responsibility for actions to avoid nonaccountability

A robot with AI should be friendly; it should not lie, steal, or cheat. It should perform good deeds, follow good habits and approved functions, be collaborative with humans, and serve humanity. It should follow moral principles of justice, fairness, and the common good, emphasizing equality, compassion, and dignity, and holding individuals and enterprises accountable to ensure that society reaps the benefits of this technology.

Prohibited AI activities should include developing or distributing intentionally inaccurate or deceptive information, hacking into computer systems, performing malicious cyberactivities (e.g., denial of service), developing and executing malicious code, divulging enterprise secrets, and violating customer or user privacy.2

For manufacturing processes and physically operational technology, thorough testing and auditing must be performed before going live. Safety and performance are significant concerns. Third-party advice can help uncover weaknesses related to AI-driven mobile robots. Robots on the production line should have some type of awareness programming (i.e., sensors) that shuts them down when critical components are not functioning to expectations.
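
The awareness programming described above could take many forms. The following is a minimal sketch, assuming each critical component reports a numeric reading with an acceptable range; the component names, thresholds, and the `ComponentReading` type are hypothetical and are not drawn from any standard or vendor interface.

```python
from dataclasses import dataclass


@dataclass
class ComponentReading:
    """One sensor reading with its acceptable range (all values hypothetical)."""
    name: str
    value: float
    low: float
    high: float
    critical: bool = True

    def in_range(self) -> bool:
        return self.low <= self.value <= self.high


def failed_critical(readings):
    """Return the names of critical components whose reading is out of range."""
    return [r.name for r in readings if r.critical and not r.in_range()]


def should_shut_down(readings) -> bool:
    """Shut down if any critical component is not functioning to expectations."""
    return bool(failed_critical(readings))


readings = [
    ComponentReading("motor_temp_c", 92.0, low=0.0, high=85.0),
    ComponentReading("gripper_pressure_kpa", 410.0, low=300.0, high=500.0),
    ComponentReading("status_led", 0.0, low=1.0, high=1.0, critical=False),
]
print(failed_critical(readings))   # ['motor_temp_c']
print(should_shut_down(readings))  # True
```

In this sketch, a fault in a noncritical component (the status LED) does not stop the robot, while a single out-of-range critical reading does. A production system would likely also need to treat a missing or stale reading as a failure.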

The US National Institute of Standards and Technology (NIST) Artificial Intelligence Risk Management Framework describes the risk associated with AI, along with the impact on and potential harm to people, enterprises, and the ecosystem.3 It was written with only AI software, not robots, in mind. Although the NIST guidance should be used in the development of AI software, it does not mention the 10 stages of AI, nor does it cover ethics and morality.4 It is expected that NIST will cover these topics in future publications as the robot industry grows.

Laws and Standards

For the most part, laws and standards related strictly to robotic technology that uses AI do not exist, although some principles have been proposed.5 These principles address interdisciplinary collaboration, protection from unsafe or ineffective systems, data privacy, transparency, protection from discrimination, and accountability.

There are, however, some standards related to various robotic components and industrial robots. User safety standards applicable to industrial robots can be found on the Occupational Safety and Health Administration (OSHA) website.6 It contains general industry standards and standards from the American National Standards Institute (ANSI), International Organization for Standardization (ISO), Canadian Standards Association (CSA), and American Welding Society (AWS). Standards related to nonindustrial mobile robots need to be written and accepted worldwide.

Both local and international laws applicable to enterprises and people should be established before the release or distribution of robots. Topics that should be addressed include lethal autonomous weapon systems (i.e., killer robots),7 defective technology, robot-related insurance (e.g., harm to humans, physical damage, cyberdamage), customer privacy, and data usage, protection, retention, and distribution.

International laws and standards are needed to protect both the mobile robot industry and the customer. In addition, the question of what can be addressed in a court of law needs to be determined.

Terms and Conditions

As with any product developed and sold by humans, AI and robots come with terms and conditions. These contracts are written to define terms, acknowledge the agreement, protect the seller, and provide a means of moving forward with the purchase.

Self-protection clauses cover intellectual property, copyrights and trademarks, warranties, amendments, and duration. A disclaimer identifies what is and is not covered by the contract (e.g., AI and robot defects, user safety). Limitation of liability (e.g., indirect or accidental damages), indemnification (payment of losses), and force majeure are also addressed.8

Services and level of service provided should include response times and availability (e.g., during normal working hours), preventive maintenance, software and hardware updates and upgrades, dispute resolution (e.g., arbitration, waiver of jury trial), and cancellation or termination of the contract. Remote shutdown should be included as a service for emergencies. Customer support and communication could be online or by telephone, chatbot, email, or website, so this should be spelled out prior to purchase. There should also be vendor and public hotlines available for emergencies.

Other covered topics include customer or purchaser training (e.g., what and where), resale agreement, replication (of AI and robot), user account registration, security, data retention and protection, prohibited uses (e.g., personal, cyberattacks), and privacy. Distribution of the purchased product, location of use (e.g., not in foreign countries), and compliance with the law are other topics to expect to see in a contract.

Concerns

There are many concerns about robots with AI. The first is bad programming due to a lack of standardized logic, programmers taking shortcuts to reach the market more quickly, poorly trained programmers, poor-quality code, insufficient testing, and inadequate quality control. Results might include the robot not functioning as promised or not being an aid to humanity.

Training of the robot is another concern. The problem is that cyber-AI obtains some of its decision-making information from the Internet, which contains articles, videos, podcasts, books, and so forth about many negative topics such as crime (e.g., homicide, theft, fraud), incorrect or deceptive information, bad habits and harmful activities, and bias (e.g., hate speech, discrimination based on race, gender, religion, or politics), to name a few. Robots with AI should be programmed to have a good moral compass and a sound ethical foundation for making decisions. The question is, how should society address robots that are malicious or have bad intent and engage in criminal, dangerous, and destructive activities? What if the robots develop a negative attitude, have no ethics, or lack a sense of morality?

Not all programmers are equal, and some of them lack the necessary experience to make a good robot. Some enterprises may release a product before it has been fully vetted in an effort to obtain or grow their market share. The growth and evolution of AI (and robots) can be compared to the growth of cybersecurity and privacy regulations in response to malicious actors.

Loss of control of the technology (e.g., through theft, bad programming, or erroneous code) is another concern. What if the robot vendor or developer goes out of business? Who will take over responsibility for maintenance and repair? What happens if the robot is stolen and reprogrammed for malicious purposes? What if the technology is stolen or distributed illegally to foreign nations? First responders may need to prepare for this eventuality.

What should humanity do if (or when) AI-programmed robots achieve singularity? When this happens, robots will be able to control their own evolution, disregard good attributes, and remove their safety protocols. They might perform malicious and destructive acts. What if this singularity were achieved by multiple robots around the world? If this were to occur, the affected robots would not all function the same way; they would act in their own self-interest. They would function without controls, competing with one another and with humanity. Safeguards should be developed for this eventuality.

Additional Recommendations

Safeguards for mobile robots should include multiple on-off switches, including physical, visible, and external on-off switches; remote-access disable commands or signals; multiple visual off signals (e.g., symbols, words, phrases), depending on the visual sensors; and multiple verbal or sound signals (words and/or phrases) in multiple languages. Another safeguard is to have an externally accessible power supply or interface. In addition, a tracking chip embedded in the robot would facilitate precise location tracking and ensure retrieval.
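
The idea of redundant off signals can be illustrated in code. The sketch below assumes that any single recognized channel is enough to halt the robot and that a halt cannot be undone through the same interface; the `ShutdownController` class, the channel names, and the stop phrases are hypothetical examples, not part of any existing robot API.

```python
from enum import Enum


class Channel(Enum):
    PHYSICAL_SWITCH = "physical_switch"
    REMOTE_COMMAND = "remote_command"
    VISUAL_SIGNAL = "visual_signal"
    VERBAL_SIGNAL = "verbal_signal"


# Hypothetical stop phrases in several languages, stored in lowercase.
STOP_PHRASES = {"stop", "halt", "alto", "arrêt"}


class ShutdownController:
    """Halts the robot when any one recognized off signal arrives."""

    def __init__(self):
        self.running = True
        self.triggered_by = None

    def signal(self, channel, payload=""):
        """Process a stop request and return whether the robot is still running."""
        if channel is Channel.VERBAL_SIGNAL:
            if payload.strip().lower() not in STOP_PHRASES:
                return self.running  # unrecognized phrase: ignore it
        if self.running:
            self.running = False
            self.triggered_by = channel  # record the first channel that fired
        return self.running


robot = ShutdownController()
robot.signal(Channel.VERBAL_SIGNAL, "hello")  # ignored, robot keeps running
robot.signal(Channel.VERBAL_SIGNAL, "ALTO")   # recognized, robot halts
print(robot.running, robot.triggered_by)
```

Treating the channels as independent means that a failure in one (for example, a blocked camera or a lost remote link) does not prevent the others from stopping the robot.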

Before the sale and release of robots or AI, the AI logic should be tested for good behavior attributes, and the shutdown or disable functions should be in working order. Testers should create checklists and tools (digital software programs) to ensure the proper and acceptable functioning of both the AI and the robot. However, any software can contain flaws, which necessitates updates and enhancements. This also means that the product might be dangerous and might not act or function as promised.

There should be a self-audit (and reboot) function to verify good robot qualities, and it should be run both on demand and at regular intervals. Testing should cover AI firmware, hardware, and software as well as intended robot usage.

For a theoretical worst-case scenario leading to the end of humanity, contingency planning would need to occur. Ideas include the construction of housing and food-growing complexes below ground. Mobile and directional electromagnetic pulse (EMP) devices could be distributed to first responders to short-circuit the robots. Planet evacuation could be considered. Simple robots could be used to help in these situations.

Conclusion

Humanity is becoming more dependent on AI and robots, which were developed to assist humans with difficult, dangerous, and tedious tasks. New laws and adjustments to the existing laws of robotics are needed to address the evolution of this technology. Robots should be friendly and helpful, know right from wrong, and function in human beings’ best interests. They should work on the side of good and warn of danger, unlike the doomsday robots depicted in many science fiction films, which show that technology can get out of control if it is not developed, monitored, maintained, and disposed of properly.

Humanity must evolve safely and productively. Both AI and robots are technologies that humans must control responsibly.

Endnotes

1 Oluwaseun, S.; “The 10 Stages of AI: A Journey From Simple Rules to Cosmic Consciousness,” Medium, 13 December 2023, https://medium.com/@sanuseun/the-10-stages-of-ai-a-journey-from-simple-rules-to-cosmic-consciousness-a23c300c65ee

2 Wlosinski, L.; “Understanding and Managing the Artificial Intelligence Threat,” ISACA® Journal, vol. 1, 2020, https://www.isaca.org/archives

3 National Institute of Standards and Technology, “Artificial Intelligence Risk Management Framework (AI RMF 1.0),” USA, January 2023, https://tsapps.nist.gov/publication/get_pdf.cfm?pub_id=936225

4 Fakhar, A.; “Religious Ethics in the Age of Artificial Intelligence and Robotics: Exploring Moral Considerations and Ethical Perspectives,” AI and Faith, 11 January 2024, https://aiandfaith.org/religious-ethics-in-the-age-of-artificial-intelligence-and-robotics-exploring-moral-considerations-and-ethical-perspectives/

5 Council of State Governments, “Artificial Intelligence in the States: Challenges and Guiding Principles for State Leaders,” 5 December 2023, https://www.csg.org/2023/12/05/artificial-intelligence-in-the-states-challenges-and-guiding-principles-for-state-leaders/

6 Occupational Safety and Health Administration, “Robotics Standards,” https://www.osha.gov/robotics/standards

7 Harvard Law School International Human Rights Clinic, “Killer Robots: Negotiate New Law to Protect Humanity,” 1 December 2021, https://humanrightsclinic.law.harvard.edu/killer-robots-negotiate-new-law-to-protect-humanity/

8 Force majeure means that the entity is not responsible for damages or for delays or failures in performance resulting from acts or occurrences beyond its reasonable control, including fire, lightning, explosion, power surge or failure, water, acts of God, war, revolution, civil commotion, or acts of civil or military authorities or public enemies; any law, order, regulation, ordinance, or requirement of any government or legal body or its representative; labor unrest, including strikes, slowdowns, picketing, or boycotts; inability to secure raw materials, transportation facilities, or fuel; energy shortages; or acts or omissions of other common carriers.

LARRY G. WLOSINSKI | CISA, CISM, CRISC, CDPSE, CAP, CBCP, CCSP, CDP, CIPM, CISSP, ITIL V3, PMP

Is a retired information security consultant with more than 24 years of experience in IT security and privacy. He has spoken at US government and professional conferences on these topics, written numerous magazine and newspaper articles, reviewed several ISACA® publications, and written questions for a variety of information security examinations.