Quantum computing progress will alter the dependence on current classical cryptography (CC) to safeguard data and network transmissions. While some organizations are already preparing for “Years to Quantum” (Y2Q)—when quantum computers will be able to reasonably break CC—by updating systems to quantum-safe/post-quantum cryptography (PQC), many are not aware of the full gamut of emerging risk. The likelihood of risk factors such as impersonation or forgery of digitally signed documents, messages, and executables coming to fruition will increase. It is essential to recognize that the quantum threat extends far beyond a simple upgrade task. Leaders must ensure that staff at all levels are educated about the risk posed by this technology. Early awareness is crucial to identifying gaps and planning necessary actions across the organization.

Understanding the Quantum Threat

Today, CC enables secure and trustworthy communications (data in transit) and protects data stored online, locally, and on removable media (data at rest). The premise of CC is that a classical computer (e.g., typical PC, smartphone, etc.) cannot efficiently use a shortcut or brute-force strategy to derive a decryption key. Generally, in the absence of quantum computing, one could reasonably assume data protected by modern CC would remain safe for years, if not decades, even with advances in distributed processing and faster processors.

Quantum computing, on the other hand, is not simply an incremental improvement in classical computing. Instead, quantum computing relies on a completely different physical implementation and means of processing via quantum mechanics (not limited to 1s and 0s). One can appreciate the bottom line without a physics Ph.D.: The math needed to attack CC, such as factoring large numbers into their prime factors, is performed differently and can be completed exponentially faster with a quantum computer. The quantum computer is not simply doing the same thing faster. The classical computer is playing checkers while the quantum computer is playing chess. Although quantum computers are currently neither powerful nor reliable enough to circumvent CC, it is imperative to plan and make changes to address emerging risk. As big tech, universities, and nation-states devote increasing amounts of research and development to quantum, the day when CC is considered broken is rapidly approaching.1

Awareness of Quantum Computing and Y2Q

Quantum computing is increasingly grabbing headlines, but it is frequently perceived as something that is “just 10 years away.” However, with simple quantum supremacy claimed in 2019 by NASA and Google,2 it is not considered science fiction anymore. Governments have served as early adopters taking notable action in the field of quantum technology, for example, by enacting the 2018 US National Quantum Initiative Act.3 Today, guidance and migration planning are available from the US National Security Agency (NSA),4 the European Union,5 and other national bodies.

While governments may see potential benefits from quantum, the key driver for quantum research is national security. In intelligence and military circles, the day when CC is rendered obsolete is known not as Y2Q but as Q-Day (a play on the WWII D-Day offensive) due to its massive potential security impact: Any nation able to leverage quantum computing to break CC will be able to intercept and manipulate communications in a manner that would be very difficult to detect. Not only would this enable spying on what was thought to be securely encrypted information, but a nation-state could conduct more convincing disinformation campaigns and sow seeds of doubt if it were able to use a quantum attack to derive some official private key to cryptographically sign a message or document. On the military side, a quantum advantage would be a giant leap forward both offensively and defensively. Nation-states that upgrade to PQC will be less vulnerable, and those that invest in encryption-breaking capabilities could potentially intercept or modify communications to take over live networked battlefield systems.6

Looking beyond government, financial institutions are also early adopters of quantum technology due to the potential nanosecond advantages (modelling quicker, computing and transmitting trades before competitors7) and the critical importance of maintaining a secure, trustworthy modern digital financial system. For example, JP Morgan is already publicizing its defensive and research efforts.8 In other industries, the quantum threat is not on the agenda, with few organizations devoting any amount of their budget to the issue. A research survey conducted by CSIRO in 20239 highlighted only a high-level, limited degree of awareness around Y2Q risk. In many organizations where secrecy and data confidentiality are not prioritized in the C-suite, the benefits and the potential risk of quantum computing are not yet receiving proper attention.

Preparing for Y2Q Requires More Than Upgrades

Unlike Y2K, there is no specific date associated with Y2Q, among other differences. For many organizations, immediate, visible issues impede Y2Q from receiving appropriate attention. Unfortunately, Y2Q will likely come sooner than many anticipate as the pace of research accelerates. Furthermore, beating the clock alone is insufficient due to the threat of harvest now, decrypt later (HNDL). Data storage will only continue to become cheaper over time. Most long-term, useful information requiring encryption is text data, which does not take up much storage space relative to its value. While some adversaries may need to be selective, well-funded nation-states or criminal enterprises can intercept vast amounts of data now and wait for Q-Day. In 2013, it was reported that a single NSA data center could hold more than five zettabytes of data (one zettabyte is the equivalent of approximately 250 billion DVDs).10
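The HNDL reasoning above can be made concrete with a small timeline check. The following Python sketch is illustrative only: the names `DataAsset` and `hndl_exposed`, the sample assets, and the assumed Q-Day year are all hypothetical placeholders, not estimates from this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataAsset:
    """A class of data protected today with classical cryptography (CC)."""
    name: str
    captured_year: int       # year an adversary could have intercepted it
    confidential_years: int  # how long the data must stay secret


def hndl_exposed(asset: DataAsset, assumed_q_day_year: int) -> bool:
    """Return True if the asset must still be secret when CC is assumed broken.

    The migration date is deliberately not an input: ciphertext harvested
    before a migration stays classically encrypted regardless of upgrades.
    """
    secret_until = asset.captured_year + asset.confidential_years
    return secret_until > assumed_q_day_year


# Hypothetical planning assumption, not a prediction.
ASSUMED_Q_DAY = 2035

assets = [
    DataAsset("session tokens", captured_year=2025, confidential_years=1),
    DataAsset("medical records", captured_year=2025, confidential_years=30),
]
exposed = [a.name for a in assets if hndl_exposed(a, ASSUMED_Q_DAY)]
print(exposed)
```

Under these assumptions, only the long-lived records are flagged. Short-lived data loses its value before a quantum attack becomes practical, while data with a long confidentiality lifetime is at risk from the moment it is intercepted.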

Most published research on the quantum threat narrowly focuses on the upgrade or migration aspects. While PQC migration efforts are important, the HNDL aspect introduces non-migration risk. Preparing for Y2Q also means considering and elevating risk around forgeries and impersonation, changing processes, and assessing how organizations interact and evaluate third parties and partners.

Upgrade and Migration Risk

While not the only concern associated with quantum, the upgrade and migration requirements are considerable. Although PQC algorithms and commercial solutions based on well-established mathematical concepts such as lattices already exist, actual implementations are still in their infancy and have yet to be tested to the same degree as current CC. For example, in 2022, the US National Institute of Standards and Technology (NIST) announced a set of PQC algorithms;11 however, researchers found that side-channel attacks may be possible in certain implementations of one of the candidates.12

Therefore, early adopters should be prepared for additional migrations by ensuring that their migration plans are repeatable, implementations are modular, and all plans, including data mapping and management repositories, are maintained as living documents instead of one-off projects.
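One way to picture a modular, repeatable migration is to store an algorithm identifier alongside every protected record so that a later swap touches only a registry entry. The sketch below assumes hypothetical names (`Scheme`, `Envelope`, `REGISTRY`, `migrate`) and uses reversible encodings as toy stand-ins; they are not encryption, and a real system would plug in vetted classical and PQC implementations.

```python
import base64
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class Scheme:
    """One pluggable protection scheme; both callables map bytes to bytes."""
    protect: Callable[[bytes], bytes]
    unprotect: Callable[[bytes], bytes]


@dataclass(frozen=True)
class Envelope:
    """Stored record: the scheme ID travels with the protected payload."""
    scheme_id: str
    payload: bytes


# TOY STAND-INS ONLY: reversible encodings, NOT encryption. In practice each
# entry would wrap a vetted classical or PQC implementation.
REGISTRY: Dict[str, Scheme] = {
    "toy-classical-v1": Scheme(base64.b16encode, base64.b16decode),
    "toy-pqc-v1": Scheme(base64.b64encode, base64.b64decode),
}


def migrate(env: Envelope, target_id: str) -> Envelope:
    """Re-protect one record under target_id; safe to run repeatedly."""
    if env.scheme_id == target_id:
        return env  # already migrated, so reruns are no-ops
    plaintext = REGISTRY[env.scheme_id].unprotect(env.payload)
    # Plaintext exists only in memory here; nothing intermediate is written.
    return Envelope(target_id, REGISTRY[target_id].protect(plaintext))


old = Envelope("toy-classical-v1", REGISTRY["toy-classical-v1"].protect(b"payroll"))
new = migrate(old, "toy-pqc-v1")
again = migrate(new, "toy-pqc-v1")  # second run changes nothing
print(new.scheme_id, again == new)
```

Because the identifier is stored with the data, a future move from one PQC algorithm to another would reuse the same `migrate` path with a new registry entry.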

Furthermore, these migrations involve more than just upgrades to protocols and algorithms. They must be understood as extract, transform, load (ETL) projects because migrations typically require decryption before reencrypting with PQC. Poorly planned ETL processes result in temporary/intermediary data being left undestroyed, unprotected, and easily accessible to attackers. It is crucial to know where data flows and where it is replicated as it transitions between systems. Moreover, the HNDL threat necessitates additional security measures that will further complicate said processes. If the encryption key for the new PQC system is transmitted through standard Transport Layer Security (TLS) implementations that use CC, or if the key is stored in a credential or secrets management tool that does not utilize PQC, it could potentially be retrieved and subjected to attacks.
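The ordering concern described above (a PQC-protected system is only as strong as the channel and vault that handle its keys) can be treated as a dependency-ordering problem. The sketch below uses Python's standard-library `graphlib`; the system names and their dependencies are hypothetical examples.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical systems: each key maps to the set of components it relies on
# to receive or store keys, so those must move to PQC before it does.
depends_on = {
    "customer-db": {"secrets-manager", "internal-tls"},
    "backup-service": {"secrets-manager"},
    "secrets-manager": {"internal-tls"},
    "internal-tls": set(),
}

# static_order() yields every node only after all of its dependencies.
upgrade_order = list(TopologicalSorter(depends_on).static_order())
print(upgrade_order)
```

In this example the transport layer and the secrets manager come out ahead of the systems that rely on them. If the map contained a circular dependency, `static_order()` would raise `graphlib.CycleError`, which is itself a useful planning signal.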

Data Backups, Logs, and Data Retention

A naive assessment of HNDL focuses solely on unauthorized, malicious interception and eavesdropping. However, a careful approach will consider that data is replicated in many places for legitimate and useful reasons. For example, modern applications are known for verbose logging of every interaction, which may contain personal or enterprise data requiring high confidentiality. Although the best practice is to avoid logging sensitive data altogether, in some scenarios, redaction, encryption, or hashing may be employed as a logging strategy. Historical logs may be retained in multiple locations, ingested downstream into other systems, or sent to partners, among other possibilities. Therefore, data architects must map and understand all these downstream destinations to minimize them and plan for required mutual changes for PQC.
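As one illustration of the hashing strategy mentioned above, the following sketch replaces email addresses in log messages with a keyed hash before they are written. It is a minimal example under stated assumptions: the class name `PseudonymizeEmails`, the simplified email pattern, the demo key, and the 16-character truncation are all hypothetical choices, and a real deployment would load the key from a secrets manager.

```python
import hashlib
import hmac
import io
import logging
import re

# Simplified pattern for illustration; real address matching is more involved.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


class PseudonymizeEmails(logging.Filter):
    """Replace email addresses in log messages with a keyed SHA-256 tag."""

    def __init__(self, key: bytes) -> None:
        super().__init__()
        self._key = key

    def _tag(self, match: re.Match) -> str:
        digest = hmac.new(self._key, match.group().encode(), hashlib.sha256)
        return "user:" + digest.hexdigest()[:16]  # truncated for readability

    def filter(self, record: logging.LogRecord) -> bool:
        # Render the message first so %-style arguments are covered too.
        record.msg = EMAIL_RE.sub(self._tag, record.getMessage())
        record.args = None
        return True  # keep the record, now without the raw address


stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.addFilter(PseudonymizeEmails(key=b"demo-key-only"))  # hypothetical key
logger = logging.getLogger("hndl-demo")
logger.setLevel(logging.INFO)
logger.propagate = False
logger.addHandler(handler)

logger.info("password reset requested by %s", "alice@example.com")
line = stream.getvalue()
print(line, end="")
```

The same address always maps to the same tag, so events can still be correlated downstream without the raw value being replicated into every log destination.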

In addition to logs, backup sets are another destination for long-term data storage. Backups may include files, databases, or entire systems using various forms of encryption managed by the application and/or the backup solution itself. Furthermore, enterprise backup solutions often call for online distributed storage in multiple clouds and utilize multiple offline, offsite locations. Many organizational policies or external requirements mandate long-term backup retention. Organizations must consider what backups are truly needed and how to manage the reencryption and destruction of old data across all systems and locations.

More generally, when thinking about the postquantum problem, organizations should review their data retention policies and practices. When possible, it is far easier to fully destroy and stop collecting data in all forms rather than dedicating resources to ETL/reencryption projects. If it is not feasible to delete certain data or decrease record retention periods, compensating measures, such as moving more data offline into physically secure locations, should be considered.

Beyond core applications and databases, it is critical for enterprises to address the following:

- Backups—Because of the HNDL risk, enterprises must either upgrade and replace old backups or wrap old backups in PQC.

- Logs—Logs may include personal data encrypted with unsafe CC; enterprises must determine how to treat old logs if they are still useful.

- File transfer—Whether automated or person-to-person, communications may be vulnerable; enterprises must identify existing tools and migrate end users and partners to quantum-safe technologies.

- External messages—Enterprises must identify where software, messages, contracts, or other documents posted publicly or shared peer-to-peer are cryptographically signed; reissue signatures with PQC; and be aware that a quantum attack can lead to forgeries on signatures that used vulnerable CC.

- Security hardware—If security hardware used for signing or authentication is vulnerable, it may need to be replaced and could also require software replacement.

Publicly Posted Keys, Certificates, and Signed Messages

An often-overlooked Y2Q risk area is external communications and publicly posted messages. Securing what is private and under organizational control is relatively obvious and simple compared to addressing data residing with partners or on the Internet. To understand this risk, consider what it means to break cryptography. While one approach is to analyze the encrypted content, typically a better path is to steal or derive the actual decryption key. Most encrypted communications rely on public-key cryptography (asymmetric cryptography); even when shared-key cryptography (symmetric cryptography) is used, the shared key is typically distributed using public-key cryptography to prevent interception. The premise of public-key cryptography is that an organization can share its public key, as long as it employs strong operational security measures with the corresponding private key; organizational possession of the private key is what underlies the trust mechanism. An attacker possessing a private key is extremely dangerous because many systems do not implement additional controls to prevent or identify forgeries and impersonations without the victimized keyholder explicitly revoking the key. The ability of a quantum computer to derive a private key efficiently (in terms of time and resources) from a public key is the essence of Y2Q, since public-key cryptography requires the public key to be shared and exposed.
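To make the private-key derivation concrete, the toy example below uses textbook RSA with a deliberately tiny modulus. It is a sketch under loud assumptions: there is no padding, the primes are far too small for real use, and classical trial division stands in for the quantum factoring step purely because the numbers are tiny. The function names are hypothetical.

```python
import hashlib


def digest_mod(message: bytes, n: int) -> int:
    """Hash the message and reduce it into the toy modulus range."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % n


def verify(message: bytes, signature: int, public_key: tuple) -> bool:
    """Textbook RSA check: signature^e mod n must equal the digest."""
    n, e = public_key
    return pow(signature, e, n) == digest_mod(message, n)


def recover_private_exponent(public_key: tuple) -> int:
    """Derive d from (n, e) alone by factoring n.

    Trial division is a classical stand-in that only works because n is
    tiny; it is NOT how a quantum attack operates.
    """
    n, e = public_key
    p = next(c for c in range(3, int(n ** 0.5) + 1, 2) if n % c == 0)
    q = n // p
    return pow(e, -1, (p - 1) * (q - 1))  # modular inverse, Python 3.8+


# The victim publishes only (n, e); 10007 and 10009 are toy primes.
public_key = (10007 * 10009, 65537)

# The attacker works from the public key alone.
d = recover_private_exponent(public_key)
forged_message = b"Amended contract: pay attacker"
forged_signature = pow(digest_mod(forged_message, public_key[0]), d, public_key[0])
print(verify(forged_message, forged_signature, public_key))
```

The forged signature passes the same verification a legitimate one would, which is why a verifier relying only on the public key cannot tell the difference once factoring becomes practical at real key sizes.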

Today, there are numerous publicly accessible repositories where an individual or an organization can post their public key(s).13 Furthermore, a public key is simply a string of text one can post wherever they think it makes sense and will be reasonably tamper-proof. For example, a security team may include a public key in its security.txt file14 available on the organization’s main website to allow more secure reporting for their disclosure/bug bounty program. Given the ubiquity and ease of obtaining a public key, an attacker could use a quantum computer to derive the corresponding CC private key to enhance the effectiveness of a phishing attempt. For example, a phishing attempt from a trusted contact’s “new” email address could claim authenticity because the content is properly signed with the private key that only the real owner should possess. Note that not all public keys are Internet-exposed. If an organization collaborates with partners to share messages and files, public keys may be exchanged directly. A malicious partner could use a quantum computer to derive the private key used and then change historical documents, agreements, etc., which are trusted because they were appropriately cryptographically signed at the time. Because common standards for cryptographic timestamps (e.g., IETF RFC 3161)15 rely on CC public-private keypairs, even the timestamp from a compliant timestamp authority could be faked, lending further credibility to any impersonation.

Looking more broadly, consider trust expectations for historical publicly available information, such as that preserved from Internet forums and newsgroups where people signed their messages. Typically, the signature and public key are included in these messages. The purpose of such a signature is to prevent tampering when replicated or archived elsewhere. Without the private key, altering the message and retaining the valid digital signature would not be feasible. However, with the ability to easily derive the private key from the public key, a malicious actor can now change the past. Never forget George Orwell’s 1984: “Who controls the past controls the future.”16 In a world plagued by AI deepfakes, it is important that PQC is used to preserve integrity and authenticity for the future.

Software Supply Chain/Distribution

Modern software is not developed in a vacuum and frequently utilizes third-party code libraries. Software supply chain risk occurs when using outdated, insecure, or forged versions of a third-party library or dependency. The risk is present in all software, whether commercial-off-the-shelf (COTS) or written internally. Cryptographic signatures help to ensure that only authentic software is utilized.17 Again, this assurance relies on adequately securing the software developer and/or distributor's private key. Forging the authenticity of a signed library package is essentially the same process as addressed in the message integrity discussion. Unfortunately, addressing the risk is an ecosystem problem requiring existing software to be re-signed with PQC. This may necessitate upgrades to the various distribution and management systems used by authors and downstream developers. Moreover, when dealing with self-published libraries that exist outside of centralized package management tools, developers might neglect essential upgrades, which could result in additional internal security overhead to compensate.

In addition, executable and finalized software packages also rely on signature verification. This will typically require changes from operating system and app store providers to handle the change; however, users of non-device operating systems still commonly utilize direct downloading for installation. This would require individual software publishers to make updates and issue newly signed executables where they host their software. The risk is more pronounced in organizations that depend on legacy and long-term support environments. Consider this scenario: A control mandates the use of signed software on an industrial control system. However, if that legacy-certified environment runs an unsupported operating system such as Windows 7, there may be no official mechanism available to support the use of PQC-signed software. Even if operating in “island mode” (an isolated system with no Internet access or connection to the main enterprise network), such a system may now require additional compensating controls to meet requirements. More broadly, older device-class hardware such as phones and tablets may not receive updates, requiring risk acceptance or costly replacements long before their expected/useful end-of-life.

Security Hardware

Hardware security devices such as Trusted Platform Modules (TPMs) and hardware security modules (HSMs) continue to see growing operational usage. While commodity systems can often be upgraded, many HSMs and other specialized security devices may face certification issues, requiring replacement.

In addition, multifactor authentication (MFA) technology, such as smart cards and hardware tokens, may not support upgrades. Such equipment will require secure destruction, as well as software and operating system changes for replacements. While it is non-trivial to extract private keys from these devices, public keys are often exportable or may exist in multiple places online.

Awareness Is Key

Organizations must consider how Y2Q will impact their daily tasks and where unexpected changes may pop up. Due diligence will require going far beyond communicating about system downtime for upgrades. Leadership and staff should be educated starting today, even in the absence of actionable Y2Q plans due to the HNDL factor. While initial pushback may claim that Y2Q is solely a tech issue, there are compliance impacts (e.g., the EU General Data Protection Regulation [GDPR]18 requires appropriate safety of personal data and transparent processing and decision making), among other risk factors, that must be understood widely.

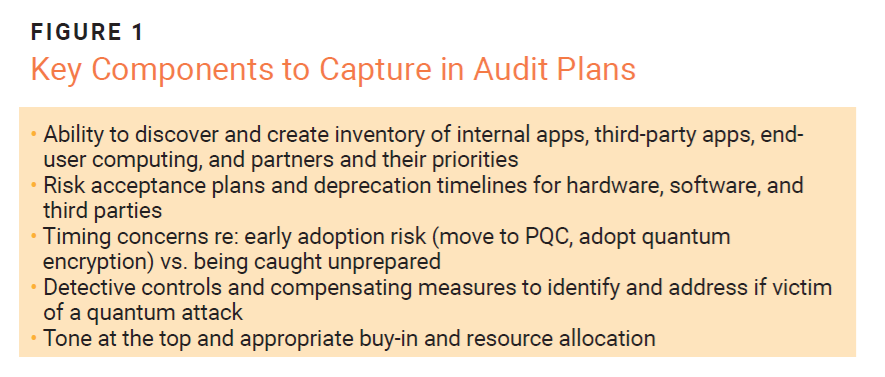

Obvious key target groups include engineering, IT, and security staff because they are the ones evaluating and implementing PQC solutions. Other major target groups are procurement and third-party risk professionals. These teams should understand organizational risk and must go beyond checking if the algorithm is on the approved list. They must think about actions and policies that need to be implemented today to minimize future HNDL exposures. In addition, audit, risk, and compliance functions must be well informed so that they can build new audit programs (figure 1) and ensure that enterprise risk registers reflect the various areas impacted by Y2Q. The organization must be prepared when customers, regulators, or board members ask: “What are we doing about quantum?”

Possible responses include:

- “We are requiring our third-party assessment teams to investigate quantum capabilities for all new partners and vendors, and it will be a key matter in re-reviews.”

- “We are mapping regulatory and compliance requirements around data processing to identify controls and limits for the usage of quantum for decision making and encryption.”

- “We are identifying and prioritizing applications, services, and vendors that can cause a severe service interruption or data event for our crown jewels from a quantum attack.”

- “We are developing a policy and communication plan to inform users at all levels about the risk from quantum and to surface areas of impact that may not be readily apparent.”

Increased reliance on outsourcing, cloud, and third parties means that an organization’s partners represent increased risk post-Y2Q. Note that modern integrations may involve multiple third parties, and a unified upgrade or migration may be a complex, slow process requiring changes in many places and possibly fourth-party involvement.

All employees need to understand where issues related to Y2Q may crop up in their day-to-day activities to ensure that there are sufficient resources to address such gaps. Consider the organization’s legal team or those involved in making deals. Are they aware that a contract or deal digitally signed without PQC may someday be modified in ways that would be difficult to detect? External file sharing is another common need, as users often turn to unauthorized shadow IT. It will become more important than ever for security teams to create, enforce, and train on paved roads for secure file sharing and messaging to prevent future quantum attacks. Security teams also need to determine where classical cryptography is being used independently in end user computing environments to upgrade encryption not under centralized control.

Cybersecurity Insurance

While there is a great deal of preventative work that an organization should conduct to prepare for Y2Q, it is important to consider risk transference as part of the plan. Throughout the past decade, there has been great growth in the maturity and availability of cybersecurity insurance. For many organizations, meeting the security and controls criteria of their insurers was an important driver for improving their security posture. As the quantum threat becomes better understood by insurers, changes in the cyberinsurance landscape should be expected.19 Some insurers may require new controls or other changes to continue to qualify, while others may establish separate policies or riders covering quantum-related events. On the other hand, some insurers may leave the market or exclude quantum-related events. In any case, quantum is poised to push up rates in the long term. Organizations must work with their insurers to ensure that they are on the same page regarding quantum computing. Enterprises must be prepared to renegotiate terms or seek coverage from a new provider. It is crucial to get cyberinsurance right, as it continues to mature from merely a good idea to a requirement for many organizations.

Conclusion

Preparing for Y2Q requires thorough planning and a comprehensive evaluation of potential risk. Organizations that view the quantum cliff as a mere upgrade issue will be the most at risk because the encrypted truth is already out there. Decisive action will need to be taken beyond simple system upgrades. Even with the best risk planning, contingency plans and procedures must be established to address the strong likelihood of data exposure or impersonation that can occur as the result of even a small mistake or gap in the migration process. Organizations must search throughout their operations for impacts on processes both large and small, and quantum must become a pervasive theme throughout risk registers.

Key awareness targets and messaging themes to distribute across the enterprise include:

- Engineers and Developers

  - For all applications and databases, identify at-risk algorithms requiring replacement.

  - Identify third-party libraries and application programming interfaces (APIs)/interconnections that require upgrades.

  - Identify legacy and unsupported libraries and specialized systems unlikely to be upgraded and make plans to address them.

  - Identify security controls to ensure that old encryption and temporary files are fully destroyed.

  - Ensure proper ordering of upgrades; upgrade secrets management systems to PQC first.

  - Understand how code signing works in the software supply chain and how the communities and ecosystems for tools and dependencies plan to address Y2Q.

- Chief Information Security Officer (CISO) and Security Leadership

  - Ensure organizational awareness that Y2Q will require behavioral and process changes beyond simple technical upgrades.

  - Identify current and long-term needs to bring in talent or train existing employees.

  - Ensure that the inventory of dependencies on vulnerable CC is mapped and that responsibility for addressing each dependency is assigned.

  - Understand the criticality of cyberinsurance to the organization and ensure that the organization is on the same page as insurance providers.

- Board Members, Regulators, Stakeholders

  - Understand that Y2Q can change long-term depreciation/cost expectations, i.e., something working perfectly well functionally may still need to be replaced to be safe.

  - Take third-party risk seriously: partner/vendor due diligence is required and may lead to terminating relationships with third parties who put the organization at risk.
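The first engineering item above, identifying at-risk algorithms, can start as a simple triage pass over a cryptographic inventory. The sketch below is an assumption-laden starting point: the function name, bucket labels, and sample inventory are hypothetical, and the family lists are deliberately short. The vulnerable set covers public-key schemes based on factoring or discrete logarithms, and the PQC set uses the family names from NIST's finalized standards.

```python
# Family names an inventory tool might report, grouped by quantum exposure.
# Public-key schemes based on factoring or discrete logarithms are the ones
# a quantum attack is expected to break outright.
QUANTUM_VULNERABLE = {"RSA", "DSA", "DH", "ECDSA", "ECDH", "ED25519", "X25519"}
PQC_STANDARDIZED = {"ML-KEM", "ML-DSA", "SLH-DSA"}


def classify(algorithm: str) -> str:
    """Map an algorithm label such as 'RSA-2048' to a triage bucket."""
    label = algorithm.upper()
    # Check PQC first so 'ML-DSA-65' is not confused with classical DSA.
    if any(label.startswith(family) for family in PQC_STANDARDIZED):
        return "pqc"
    if any(label.startswith(family) for family in QUANTUM_VULNERABLE):
        return "replace"
    return "review"  # symmetric ciphers, hashes, and anything unrecognized


# Hypothetical inventory: system name -> algorithm label found in use.
inventory = {
    "payments-api": "RSA-2048",
    "vpn-gateway": "ECDH-P256",
    "archive-store": "AES-256-GCM",
    "pilot-service": "ML-KEM-768",
}
report = {system: classify(alg) for system, alg in inventory.items()}
print(report)
```

The "review" bucket is intentionally conservative: anything the classifier does not recognize is surfaced for a human decision rather than assumed safe.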

An enterprisewide understanding of—and preparation for—quantum will help keep risk at bay and ensure resilience.

Endnotes

1 Houston-Edwards, K.; “,” Scientific American, 1 February 2024

2 Tavares, F.; “Google and NASA Achieve Quantum Supremacy,” NASA, USA, 23 October 2019

3 National Quantum Coordination Office, “About the National Quantum Initiative,” USA

4 National Security Agency/Central Security Service, “NSA Releases Future Quantum-Resistant (QR) Algorithm Requirements for National Security Systems,” USA, 7 September 2022

5 European Commission, “Commission Publishes Recommendation on Post-Quantum Cryptography,” European Union, 11 April 2024

6 Swayne, M.; “NATO Sees Massive Military Advantages In Quantum Tech,” The Quantum Insider, 17 October 2022

7 Kaminska, I.; “How Traders Might Exploit Quantum Computing,” The Financial Times, 2021

8 JP Morgan, Global Technology Applied Research

9 Coates, R.; Baruwal-Chhetri, M.; et al.; “Risks of Quantum Computing to Cybersecurity: Perspectives From Experts and Professionals,” CSIRO, 2023

10 Kramer, M.; “The NSA Data: Where Does It Go?,” National Geographic, 12 June 2013

11 National Institute of Standards and Technology (NIST), “NIST Announces First Four Quantum-Resistant Cryptographic Algorithms,” USA, 5 July 2022

12 Dubrova, E.; Ngo, K.; et al.; “Breaking a Fifth-Order Masked Implementation of CRYSTALS-Kyber by Copy-Paste,” Royal Institute of Technology, 2022

13 E.g., https://keys.openpgp.org/

14 Radesky, S.; Kennelley, S.; “Security.txt: A Simple File with Big Value,” Cybersecurity and Infrastructure Security Agency, USA, 20 December 2023

15 Adams, C.; Cain, P.; et al.; “Internet X.509 Public Key Infrastructure Time-Stamp Protocol (TSP),” August 2001

16 Orwell, G.; 1984, Signet Classic, USA, 1961

17 Apache Maven Project, “Guide to Uploading Artifacts to the Central Repository,” 5 July 2024; Kuppusamy, K.; Diaz, V.; et al.; “PEP 458 – Secure PyPI Downloads With Signed Repository Metadata,” 27 September 2013

18 Intersoft Consulting, “General Data Protection Regulation”

19 Radanyi, R.; “Quantum Computing: The Cyber Insurer’s Next Challenge?,” Moody's, 25 July 2023

ERIC H. GOLDMAN | CISA, SECURITY+

Is an information security professional with experience in financial services and retail. He specializes in the human aspects of information security, particularly in human-computer interaction. He can be reached at Eric.Goldman@owasp.org.