The proliferation of artificial intelligence (AI) technologies presents unprecedented opportunities for business transformation and innovation. According to McKinsey’s The State of AI in 2023 report,1 the AI market is projected to reach US$407 billion by 2027, up from an estimated US$86.9 billion in revenue in 2022. However, the widespread adoption of AI also brings multifaceted risk, including privacy breaches, data security vulnerabilities, and ethical dilemmas. Managing this risk and ensuring responsible AI use requires robust governance mechanisms. This article proposes an AI governance framework, referred to here as a practical, robust implementation and sustainability model (PRISM), to foster digital trust in AI systems. PRISM embodies a set of core AI principles, mandates adherence to a responsible AI policy, uses impact assessments for risk evaluation, fortifies security and privacy defenses, and manages the AI product life cycle to maintain consistent responsibility and protection.

The accelerated adoption of AI technologies in enterprises has prompted a critical need for effective governance frameworks to mitigate associated risk and ensure responsible and secure AI implementation.

AI Risk and Challenges Faced

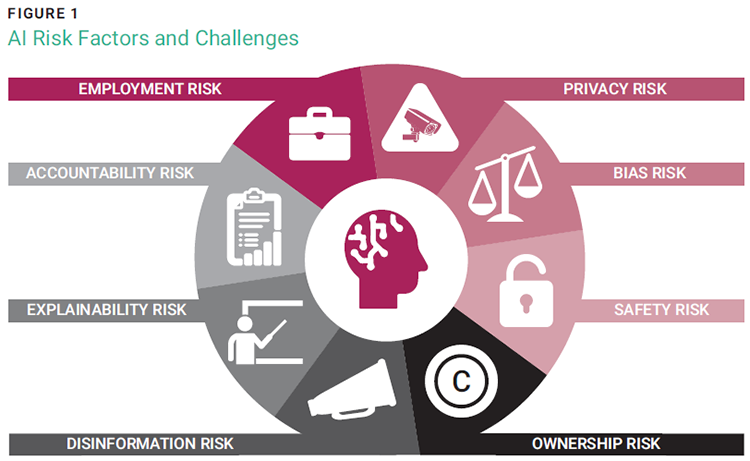

The latest trends in AI risk underscore the complexity and gravity of the challenges posed by unchecked AI implementation. Across industries, concerns regarding biased decision-making, opaque algorithmic processes, and potential threats to individual privacy have gained prominence. Among the detrimental impacts of unregulated AI practices has been algorithmic bias leading to discriminatory outcomes in recruitment processes.2 Similarly, the exposure of sensitive personal data3 due to inadequate security measures in AI systems has raised alarms about data breaches and privacy infringements. Figure 1 shows some of the risk factors and challenges faced by society and enterprises, which currently cannot be resolved by any single comprehensive solution. They include:

- Employment risk—Job redundancy after task automation; skill shifts; fear of job loss

- Privacy risk—Collection of large amounts of personal data; lack of user awareness and informed consent; insufficient anonymization

- Bias risk—Algorithm bias; failure to eliminate offensive language and discriminatory data

- Safety risk—Deepfakes; convincing phishing emails

- Ownership risk—Plagiarism and copyright infringement via content generated by AI

- Disinformation risk—Generation of fake news stories to spread misinformation

- Explainability risk—Difficulty in understanding how complex AI models generate their output

- Accountability risk—Data quality and integrity issues resulting in AI providing inaccurate information

Components of a Secure AI Governance Framework

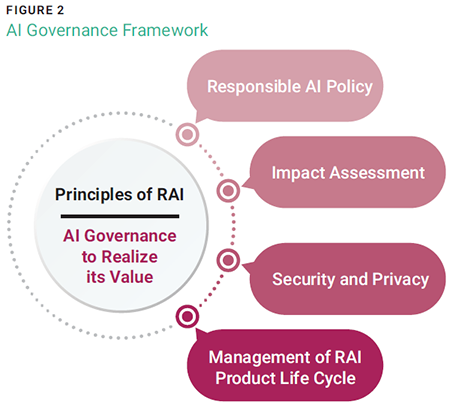

Effective governance structures, policies, and oversight mechanisms are essential to managing risk and ensuring responsible AI usage. Figure 2 depicts a proposed framework with key principles and components to guide enterprises to kickstart a reliable AI adoption journey.

Principles of AI

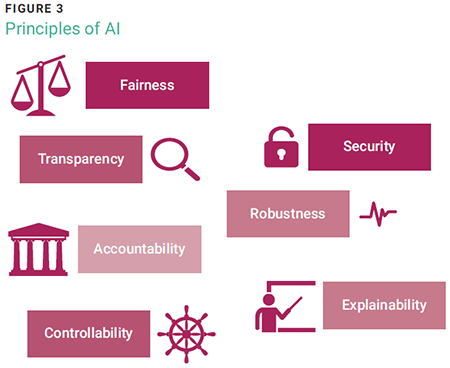

The foundational principles of AI are the cornerstone of a governance framework. They require that AI respect individual privacy rights and protect personal data, which includes adhering to all relevant data protection laws and using robust security measures to prevent unauthorized access. They also require that AI be fair, accountable, and transparent so that it aligns with societal values and ethical norms. The principles (figure 3) include:

- Fairness—AI should be designed and used to treat all individuals and groups fairly. It should not create or reinforce bias or discrimination based on factors such as race, gender, age, or socioeconomic status.

- Accountability—Entities that develop and deploy AI should be accountable for its impacts. This includes implementing mechanisms for addressing any negative effects that may arise.

- Transparency and explainability—AI systems should be transparent. This means that it should be clear how they work, how they make decisions, and who is responsible for them.

- Controllability—AI should not undermine human autonomy or decision making. People should have the ability to understand and challenge decisions made by AI, and to opt out of AI decision making when appropriate.

- Robustness and security—Consideration should be given to data protection and the long-term impacts of AI, including its effects on jobs, skills, the environment, misuse, data breaches, and data privacy.

Together, these principles act as a guiding framework that informs the development of policies, the deployment of technologies, and the governance of AI systems, ensuring that AI serves humanity’s best interests and protects against potential harm.

Responsible AI Policy

An overarching responsible AI (RAI) policy provides a reference point for identifying when teams and organizations deviate from desired actions. A well-defined RAI policy enables accountability within an organization by formalizing requirements that address the use of off-the-shelf AI tools and self-developed AI-driven systems, thereby mitigating safety and ownership risk. Following fundamental RAI principles can help achieve enterprise-wide alignment.

Enterprises planning to use generative AI services for productivity improvement should include several key generative AI prompt engineering best-practice clauses in the RAI policy. For example, never input enterprise secrets, sensitive information, customer and employee information, personal information, passwords or secret keys, enterprise intellectual property, enterprise program source code, and business plans or strategies into public generative AI services. All AI-generated content should be reviewed (and validated if needed) before its use in the workplace is allowed. Special attention should be paid to detect any infringement of copyright or intellectual property within AI-generated content.
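A policy clause of this kind can be backed by an automated screen that runs before a prompt leaves the enterprise. The following is a minimal sketch, not a complete data loss prevention control: the pattern list, the category names, and the function names `screen_prompt` and `is_allowed` are all hypothetical, and a real policy would define its own categories and detection methods.

```python
import re

# Hypothetical blocked categories; an enterprise policy would supply its own.
BLOCKED_PATTERNS = {
    "email address": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "secret key or password": re.compile(
        r"(?i)\b(password|passwd|api[_-]?key|secret)\b\s*[:=]\s*\S+"
    ),
    "private key block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
}


def screen_prompt(prompt: str) -> list:
    """Return the names of the blocked categories detected in the prompt."""
    return [
        name for name, pattern in BLOCKED_PATTERNS.items() if pattern.search(prompt)
    ]


def is_allowed(prompt: str) -> bool:
    """A prompt may be sent to a public service only if nothing was detected."""
    return not screen_prompt(prompt)


if __name__ == "__main__":
    for text in (
        "Summarize the attached public press release.",
        "Debug this config: api_key = abc123",
    ):
        print(is_allowed(text), screen_prompt(text))
```

Pattern matching catches only obvious cases, so a screen like this complements, rather than replaces, the human review of AI-generated content that the policy requires.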

Impact Assessment

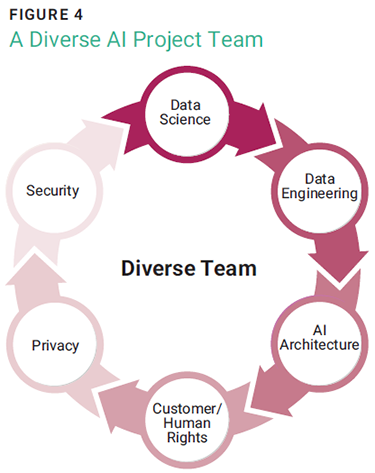

After developing the RAI policy, it is crucial to conduct an RAI impact assessment to determine which algorithms can be adopted and which cannot be adopted due to risk scoring against RAI principles. Having a diverse team during the RAI impact assessment and project is essential (figure 4). By bringing together individuals from different backgrounds and perspectives within the enterprise, a more holistic approach can be taken to address potential biases and ensure that AI is developed and used fairly and equitably. A lack of diversity in the development team could lead to unintended consequences and negative impacts.4 Therefore, it is essential to prioritize diversity and inclusivity in AI projects to ensure that all areas of the business are represented and accounted for. Author Laura Sartori referred to this as a sociotechnical approach.5

Several RAI assessment templates, such as the Microsoft Responsible AI Impact Assessment Template,6 the Demos Helsinki Non-discriminatory AI Assessment Framework,7 and the European Commission’s High-Level Expert Group on AI (HLEG-AI)-published Assessment List for Trustworthy Artificial Intelligence (ALTAI) tool,8 can serve as reference points to build a tailor-made enterprise AI assessment process.
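To illustrate how risk scoring against RAI principles could drive an adoption decision, the sketch below scores a use case against the five principles listed earlier. It is an assumption-laden example and is not taken from any of the templates cited above: the 1-to-5 scale, the thresholds, and the names `Assessment` and `decide` are hypothetical.

```python
from dataclasses import dataclass

# The five principles named in this article.
PRINCIPLES = (
    "fairness",
    "accountability",
    "transparency",
    "controllability",
    "robustness",
)


@dataclass
class Assessment:
    use_case: str
    scores: dict  # principle -> risk score from 1 (low) to 5 (high); assumed scale


def decide(assessment: Assessment, reject_at: int = 5, review_at: int = 3) -> str:
    """Map the worst per-principle risk score to an adoption decision."""
    missing = [p for p in PRINCIPLES if p not in assessment.scores]
    if missing:
        # Every principle must be scored before a decision is made.
        raise ValueError(f"unscored principles: {missing}")
    worst = max(assessment.scores[p] for p in PRINCIPLES)
    if worst >= reject_at:
        return "reject"
    if worst >= review_at:
        return "adopt with mitigations"
    return "adopt"


if __name__ == "__main__":
    screening = Assessment(
        "resume screening",
        {"fairness": 5, "accountability": 2, "transparency": 3,
         "controllability": 2, "robustness": 2},
    )
    print(decide(screening))  # the fairness score alone triggers rejection
```

Using the worst score rather than an average is a deliberate choice in this sketch: a severe risk under one principle should not be offset by low risk under the others.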

The Importance of Automated and Recurring Assessments

AI assessments are not sustainable unless they are automated and recurring, because many AI systems continue to learn from new data after deployment. Continuous or automatic assessment is necessary to ensure that newly learned data (for example, feedback used in reinforcement learning) does not poison the model. Many tools, such as the Responsible AI Verify Open-Source Toolkits9 and Holistic AI Audits,10 are available in the OECD.AI technical tool catalog11 to help AI actors build and deploy trustworthy AI systems.
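A recurring assessment can be as simple as recomputing a fairness metric on each new batch of decisions and flagging the batches that drift past a threshold. The sketch below uses the demographic parity gap (the difference between the highest and lowest selection rates across groups), which is one common metric among many. The function names and the 0.2 threshold are assumptions for illustration and do not come from the tools cited above.

```python
from collections import defaultdict


def selection_rates(records):
    """records: iterable of (group, selected) pairs; returns rate per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        positives[group] += int(bool(selected))
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(records):
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


def recurring_check(batches, max_gap=0.2):
    """Return the indexes of the batches whose parity gap exceeds max_gap."""
    return [i for i, batch in enumerate(batches) if parity_gap(batch) > max_gap]


if __name__ == "__main__":
    week_1 = [("A", 1), ("A", 0), ("B", 1), ("B", 0)]
    week_2 = [("A", 1), ("A", 1), ("B", 0), ("B", 0)]
    print(recurring_check([week_1, week_2]))
```

In practice such a check would be scheduled (for example, per retraining cycle) and its results routed to the team that owns the impact assessment.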

Security and Privacy of AI

Ensuring AI system security is a significant challenge because it is not the same as securing traditional IT systems. The security protection approach for AI must be more comprehensive to address the unique vulnerabilities and risk associated with AI technologies. Several risk factors are associated with AI systems, such as insufficient anonymization, offensive language, discriminatory data, and model poisoning. Testing the machine learning (ML) model is essential to detect adversarial inputs and filter them out properly, ensuring that the model does not generate false or unpredictable results. Cloud platforms such as Google Cloud Platform,12 Microsoft Azure,13 and Amazon Web Services14 provide tools to help automate adversarial testing. The security team is critical in AI initiatives and can contribute to AI threat modeling. MITRE Adversarial Threat Landscape for Artificial-Intelligence Systems (ATLAS) is a knowledge base of adversary tactics, techniques, and case studies for ML systems based on real-world observations and demonstrations. Emphasizing the importance of AI system security and implementing appropriate measures can help safeguard AI systems from potential security risk.
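As a small illustration of what an adversarial test measures, the sketch below perturbs inputs to a logistic regression classifier in the style of the fast gradient sign method and reports how many correct predictions flip. It is a toy example under stated assumptions: the model, the weights, the sample data, and the function names are hypothetical, and it is not the method used by any of the cloud tools cited above.

```python
import math


def sign(value):
    return (value > 0) - (value < 0)


def predict_proba(weights, bias, x):
    """Probability of class 1 under a logistic regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def perturb(weights, x, label, epsilon):
    """Move each feature by epsilon in the direction that increases the loss.

    For logistic regression the gradient of the log loss with respect to x
    is (p - y) * w, so its sign is -sign(w) when y = 1 and sign(w) when y = 0.
    """
    direction = -1.0 if label == 1 else 1.0
    return [xi + epsilon * direction * sign(w) for xi, w in zip(x, weights)]


def flip_rate(weights, bias, samples, epsilon):
    """Share of correctly classified samples whose prediction flips."""
    correct = 0
    flipped = 0
    for x, label in samples:
        if (predict_proba(weights, bias, x) >= 0.5) != bool(label):
            continue  # skip samples the model already gets wrong
        correct += 1
        adversarial = perturb(weights, x, label, epsilon)
        if (predict_proba(weights, bias, adversarial) >= 0.5) != bool(label):
            flipped += 1
    return flipped / correct if correct else 0.0


if __name__ == "__main__":
    samples = [([1.0, 0.0], 1), ([-1.0, 0.0], 0), ([0.1, 0.0], 1)]
    print(flip_rate([2.0, -1.0], 0.0, samples, epsilon=0.1))
```

A rising flip rate at a small epsilon suggests that inputs near the decision boundary can be manipulated cheaply, which is useful evidence for the threat model.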

Respecting Data Privacy

Data privacy is of the utmost importance when designing an AI system. It is imperative to ensure transparency and accountability in the design of AI systems, including aspects such as:

- Contracts—Sign service agreements that explain the rights and obligations of both service providers and service users.

- Consent—Obtain explicitly stated consent and pay attention to the personal privacy laws in the countries of the target users.

- Personal Information Collection Statement (PICS)—Supply transparent notes that explain how user data is handled and the purpose of the collection.

- Data fit for purpose—Anonymize collected personal information to the greatest extent possible.

- Useful privacy-enhancing technology (PET) considerations for AI:

  - Homomorphic encryption converts data into ciphertext that can be analyzed and worked with as if it were still in its original form. This enables complex mathematical operations on encrypted data without compromising the encryption.

  - Differential privacy involves adding noise to data to protect individuals’ privacy. However, this noise can also reduce the utility of the data, making it less accurate or useful for certain types of analysis. This trade-off can be difficult to manage and requires care to balance privacy and utility.

  - Federated learning (often called collaborative learning) is a decentralized approach to training ML models. It does not require an exchange of data from client devices to global servers. Instead, raw data on edge devices is used to train the model locally, increasing data privacy.
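The privacy and utility trade-off in differential privacy can be seen in a minimal sketch of the Laplace mechanism applied to a counting query. The function names and the sample data are hypothetical. The sketch relies on two standard facts: a counting query has sensitivity 1, and the difference of two independent exponential variables with the same mean follows a Laplace distribution.

```python
import random


def laplace_noise(scale, rng):
    """Draw Laplace(0, scale) noise as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon, rng=None):
    """Return a noisy count of the values that satisfy the predicate.

    Adding or removing one person changes a count by at most 1, so the
    noise scale is 1 / epsilon. A smaller epsilon gives stronger privacy
    and a noisier, less useful answer.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for value in values if predicate(value))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    ages = [25, 31, 47, 52, 38]
    for epsilon in (1.0, 0.1):
        print(epsilon, private_count(ages, lambda age: age >= 38, epsilon))
```

Running the example several times shows the answers for epsilon 0.1 scattering much more widely around the true count than the answers for epsilon 1.0.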

It is essential to have tools and tests during the development life cycle to ensure that no reidentification is possible.
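One test that can run in the development life cycle is a k-anonymity check, which verifies that every combination of quasi-identifiers is shared by at least k records. The sketch below is illustrative only: the column names and the function names are hypothetical, and passing a k-anonymity check reduces reidentification risk without proving that reidentification is impossible.

```python
from collections import Counter


def smallest_group(rows, quasi_identifiers):
    """Size of the smallest group of rows sharing the same quasi-identifiers."""
    groups = Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)
    return min(groups.values()) if groups else 0


def is_k_anonymous(rows, quasi_identifiers, k):
    """True if every quasi-identifier combination appears at least k times."""
    return smallest_group(rows, quasi_identifiers) >= k


if __name__ == "__main__":
    rows = [
        {"age_band": "30-39", "district": "East", "diagnosis": "A"},
        {"age_band": "30-39", "district": "East", "diagnosis": "B"},
        {"age_band": "40-49", "district": "West", "diagnosis": "A"},
    ]
    # The single 40-49/West record stands alone, so the data set fails k = 2.
    print(is_k_anonymous(rows, ["age_band", "district"], k=2))
```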

Responding to AI Data Subject Requests

Many enterprises may overlook this necessity, but it is crucial to establish a mechanism to accept and process individuals’ (AI system data subjects’) requests for reviewing, correcting, and deleting their personal information. These requests must be handled promptly per the requirements of laws such as the EU General Data Protection Regulation (GDPR) and China’s Personal Information Protection Law (PIPL). After responding to a subject’s request, it is also essential to adjust the model to correct or remove the information in the training data set or model. Traceability and controllability are critical, and human oversight must be exercised.
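The mechanism described above can be sketched as a request record with a response deadline plus an erasure step that also signals whether the model needs adjusting. Everything here is an assumption for illustration: the names `SubjectRequest` and `apply_erasure`, the `subject_id` field, and the 30-day default, which should be replaced with the time limit set by the applicable law.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class SubjectRequest:
    subject_id: str
    kind: str  # "access", "rectify", or "erase"
    received: date
    response_days: int = 30  # assumed default; set per the applicable law

    @property
    def due(self) -> date:
        """Date by which the enterprise should have responded."""
        return self.received + timedelta(days=self.response_days)


def apply_erasure(training_rows, request):
    """Remove the subject's rows and report whether retraining is needed.

    Returns (remaining_rows, retrain_needed). Retraining is flagged whenever
    at least one row was removed, because the model may have learned from it.
    """
    remaining = [
        row for row in training_rows if row["subject_id"] != request.subject_id
    ]
    return remaining, len(remaining) != len(training_rows)


if __name__ == "__main__":
    request = SubjectRequest("u-102", "erase", date(2024, 1, 1))
    rows = [{"subject_id": "u-101"}, {"subject_id": "u-102"}]
    print(request.due, apply_erasure(rows, request))
```

Keeping the retraining flag next to the erasure result supports traceability, since a reviewer can see which requests led to a model update.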

Management of RAI Product Life Cycle

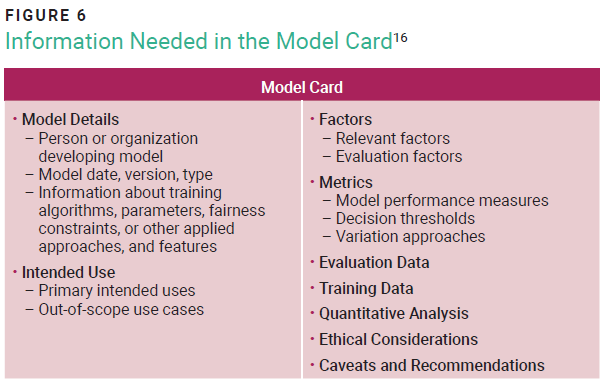

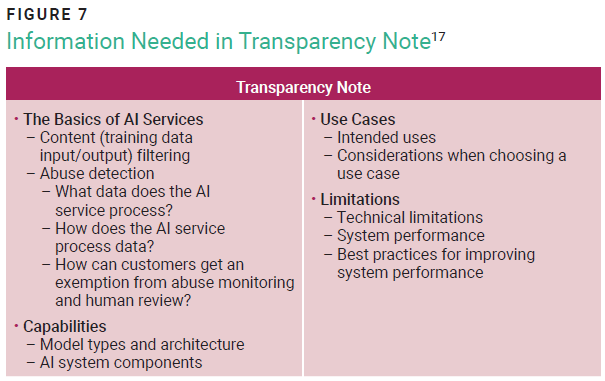

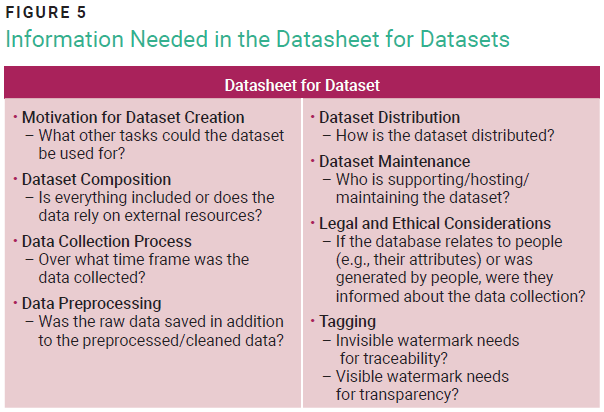

Several elements must be considered to embed RAI into the project life cycle. These include clear RAI values, training data quality, AI system artifacts such as datasheets for datasets (figure 5) and model cards (figure 6), model testing, transparency notes (figure 7),15 and harm mitigation. Ethical considerations such as fairness, accountability, and transparency should guide the development and deployment of AI systems.
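An artifact such as a model card can be kept as structured data so that a release pipeline can check it for completeness. The sketch below uses the section names from the model cards paper listed in the endnotes; the class layout, the helper names, and the sample values are hypothetical.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class ModelCard:
    model_details: str
    intended_use: str
    factors: list
    metrics: dict
    evaluation_data: str
    training_data: str
    quantitative_analyses: str
    ethical_considerations: str
    caveats_and_recommendations: str


def missing_sections(card: ModelCard) -> list:
    """Names of the sections that were left empty."""
    return [name for name, value in asdict(card).items() if not value]


def to_json(card: ModelCard) -> str:
    """Serialize the card so that it can be stored with the model artifact."""
    return json.dumps(asdict(card), indent=2)


if __name__ == "__main__":
    card = ModelCard(
        model_details="Hypothetical churn classifier, version 0.1",
        intended_use="Internal retention analysis only",
        factors=["customer tenure", "region"],
        metrics={"accuracy": 0.9},
        evaluation_data="Held-out sample of 2023 accounts",
        training_data="2021-2022 account history",
        quantitative_analyses="Accuracy reported per region",
        ethical_considerations="",
        caveats_and_recommendations="Not validated for new markets",
    )
    print(missing_sections(card))  # lists the empty ethical_considerations
```

A release gate could refuse to deploy a model while `missing_sections` returns any entry.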

The culmination of this exploration emphasizes the significance of AI governance for enterprises to embrace technological advancements and harness tangible benefits from AI in a secure and accountable manner.

Conclusion

The escalating integration of AI technologies within enterprises necessitates a proactive and strategic approach to governance. Establishing a secure AI governance framework is imperative for enterprises to navigate the complexities of AI risk and harness the transformative potential of AI responsibly and transparently. By embracing AI governance, organizations can mitigate risk, foster digital trust, comply with regulatory standards, and capitalize on AI’s immense value. Enterprises must prioritize developing and implementing AI governance frameworks to safeguard against risk and seize the myriad opportunities that AI presents in the contemporary digital landscape.

Endnotes

1 Chui, M.; Yee, L.; et al.; The State of AI in 2023: Generative AI’s Breakout Year, McKinsey & Company, 1 August 2023, https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai-in-2023-generative-AIs-breakout-year

2 Parikh, N.; “Understanding Bias In AI-Enabled Hiring,” Forbes, 21 October 2021, https://www.forbes.com/sites/forbeshumanresourcescouncil/2021/10/14/understanding-bias-in-ai-enabled-hiring

3 Andrew, T.; “OpenAI Says a Bug Leaked Sensitive ChatGPT User Data,” Engadget, 25 March 2023

4 Li, M.; “To Build Less-Biased AI, Hire a More-Diverse Team,” Harvard Business Review, 26 October 2020, https://hbr.org/2020/10/to-build-less-biased-ai-hire-a-more-diverse-team

5 Sartori, L.; Theodorou, A.; “A Sociotechnical Perspective for the Future of AI: Narratives, Inequalities, and Human Control,” Ethics and Information Technology, vol. 4, 2022, https://doi.org/10.1007/s10676-022-09624-3

6 Microsoft, “Microsoft Responsible AI Impact Assessment Template,” June 2022, https://blogs.microsoft.com/wp-content/uploads/prod/sites/5/2022/06/Microsoft-RAI-Impact-Assessment-Template.pdf

7 Bjork, A.; Ojanen, A.; et al.; “An Assessment Framework for Non-discriminatory AI,” Demos Helsinki, 2022, https://demoshelsinki.fi/julkaisut/an-assessment-framework-for-non-discriminatory-ai

8 High-Level Expert Group on AI, “Assessment List for Trustworthy Artificial Intelligence (ALTAI) for Self-Assessment,” European Commission, European Union, July 2020, https://digital-strategy.ec.europa.eu/en/library/assessment-list-trustworthy-artificial-intelligence-altai-self-assessment

9 AI Verify Foundation, “AI Verify,” https://aiverifyfoundation.sg/what-is-ai-verify/

10 Holistic AI, “Holistic AI Audits,” OECD.AI, 27 March 2023, https://oecd.ai/en/catalogue/tools/holistic-ai-audits

11 OECD.AI, “Catalogue of Tools & Metrics for Trustworthy AI,” https://oecd.ai/en/catalogue/tools

12 Google Cloud, “Introduction to Vertex Explainable AI,” https://cloud.google.com/vertex-ai/docs/explainable-ai

13 Kumar, R.S.S.; “AI Security Risk Assessment Using Counterfit,” Microsoft Security Blog, 3 May 2021, https://www.microsoft.com/en-us/security/blog/2021/05/03/ai-security-risk-assessment-using-counterfit/

14 Rauschmayr, N.; Aydore, S.; et al.; “Detect Adversarial Inputs Using Amazon SageMaker Model Monitor and Amazon SageMaker Debugger,” AWS Machine Learning Blog, 5 April 2022, https://aws.amazon.com/blogs/machine-learning/detect-adversarial-inputs-using-amazon-sagemaker-model-monitor-and-amazon-sagemaker-debugger/

15 Microsoft, “Transparency Note for Azure OpenAI Service,” 4 February 2024, https://learn.microsoft.com/en-us/legal/cognitive-services/openai/transparency-note?tabs=text

16 Mitchell, M.; Wu, S.; et al.; “Model Cards for Model Reporting,” Association for Computing Machinery, 2019, https://doi.org/10.1145/3287560.3287596

17 Op cit Microsoft

CAROL LEE | CISM, CRISC, CDPSE, C|CISO, CCSP, CEH, CIPM, CSSLP

Is the head of cybersecurity at a Hong Kong-listed company. She leads the enterprise-wide cybersecurity and privacy program to support cloud-first and enterprise digital transformation strategies. She was awarded a Global 100 Certified Ethical Hacker Hall of Fame award and the Hong Kong Cyber Security Professionals Award in recognition of her determination and commitment to assuring the safety of the cyberworld.

TERENCE LAW | CISA, CFA, CISSP, CPA, CERTIFIED BANKER

Is the director of emerging technologies of the ISACA China Hong Kong Chapter. He is the head of innovation and data for the internal audit department of a leading financial institution. With more than 20 years of experience in auditing, cybersecurity, and emerging technologies, he is passionate about giving back to the community and volunteers his time and expertise to promote the adoption of industry-leading practices.

TOM HUANG | CISA, PMP, PRINCE2

Is the head of technology and operational risk management at Livibank. He has extensive experience in the financial services industry, with expertise in risk management in technology, cybersecurity, data, and the cloud. He leverages technologies to develop innovative and safe solutions that enhance customer experience in day-to-day life. He participates in industry forums and is always happy to share insights with the community.

LANIS LAM | CISA, AWS (SAP), CPA

Is a partner in the technology consulting practice of KPMG China. She is a leader in the field of technology risk management with more than 17 years of experience. Her expertise spans across areas such as artificial intelligence, cloud computing, third-party risk management, cybersecurity, and system resilience. She is passionate about discussing and sharing ideas about emerging technologies.