Machine learning (ML) is becoming a crucial asset in the field of auditing. It can help financial auditors, internal auditors, forensic auditors, and tax auditors manage substantial amounts of data more efficiently. Furthermore, ML can aid compliance auditors in verifying adherence to regulations and support information systems auditors in analyzing intricate datasets. Across these specialties, ML has the capacity to improve both the efficiency and the effectiveness of audits. It is crucial for auditors to comprehend how ML is integrated into the audit process, its implications for professionals in the field (particularly external auditors who offer impartial verification), and the potential obstacles auditors may encounter while implementing it.

What Is Machine Learning?

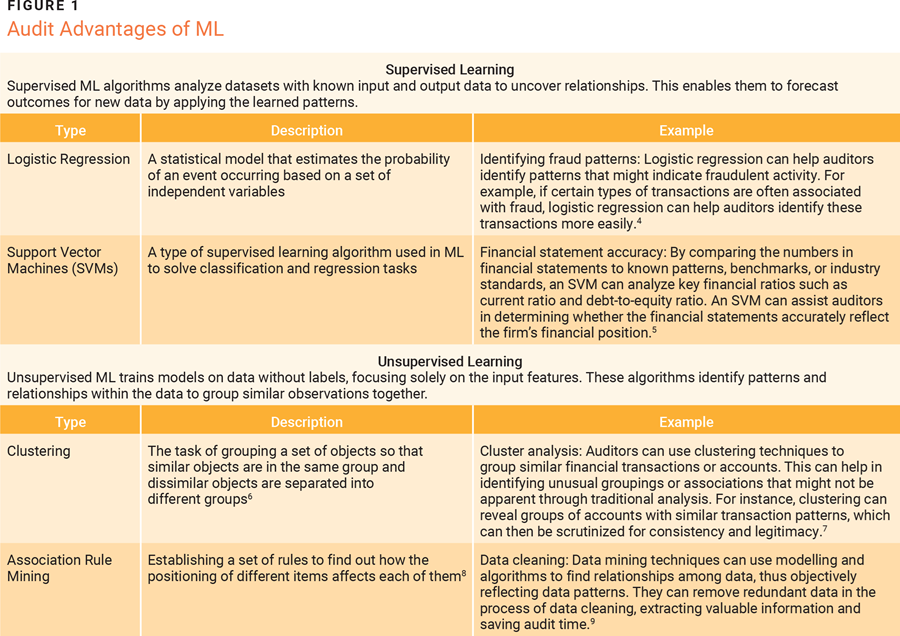

Arthur Samuel, an artificial intelligence (AI) pioneer, defined ML as the “field of study that gives computers the ability to learn without being explicitly programmed.”1 It involves creating models that can make predictions or sort items without human intervention. These models learn from large amounts of data, spotting patterns and links by themselves. ML can be split into two main groups: supervised and unsupervised learning. According to IBM, supervised learning is an ML approach characterized by its use of labeled datasets that are designed to train algorithms to classify data or predict outcomes.2 This means there are set inputs with known results. Unsupervised learning involves data that does not have labels. Models of this kind look for patterns and connections without any specific guidance.3
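The difference between the two groups can be illustrated with a minimal sketch. It is not drawn from the cited sources: the transaction amounts, the labels "normal" and "flagged," and the function names are all hypothetical, and the two techniques shown (a nearest-average classifier and a one-dimensional two-group clustering) are simply small stand-ins for supervised and unsupervised learning.

```python
from statistics import mean

# Hypothetical labeled history: (transaction amount, label given by a reviewer).
labeled = [(120.0, "normal"), (95.0, "normal"), (130.0, "normal"),
           (9800.0, "flagged"), (10500.0, "flagged")]

def train_centroids(rows):
    """Supervised: learn one average amount per known label."""
    by_label = {}
    for amount, label in rows:
        by_label.setdefault(label, []).append(amount)
    return {label: mean(values) for label, values in by_label.items()}

def predict(centroids, amount):
    """Assign the label whose learned average is closest to the amount."""
    return min(centroids, key=lambda label: abs(centroids[label] - amount))

def two_means(values, iterations=10):
    """Unsupervised: split unlabeled amounts into two groups (1-D k-means, k=2).

    Assumes the input contains at least two distinct values.
    """
    low, high = min(values), max(values)
    for _ in range(iterations):
        groups = ([], [])
        for v in values:
            groups[0 if abs(v - low) <= abs(v - high) else 1].append(v)
        low, high = mean(groups[0]), mean(groups[1])
    return groups

centroids = train_centroids(labeled)
print(predict(centroids, 8700.0))  # prints "flagged"

# No labels here: the grouping comes from the data alone.
unlabeled = [110.0, 140.0, 90.0, 10200.0, 125.0, 9900.0]
small, large = two_means(unlabeled)
print(small, large)
```

In the first half the known labels drive what the model learns. In the second half the same kind of data is grouped with no labels at all, and it is left to the auditor to interpret what each group means.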

How Can ML Benefit Auditors?

ML has expanded the range of options available for conducting an audit. It improves conventional auditing methods by utilizing sophisticated computer algorithms and analytics to extract more comprehensive information from extensive datasets. This enhances the efficiency of auditing processes and outcomes while helping to preserve audit quality.

Figure 1 describes the various categories of ML and illustrates their potential advantages in the context of an audit.

Operational Efficiencies

ML automates data processing tasks, allowing auditors to analyze large datasets more efficiently. Instead of relying on sampling methods, auditors can analyze the full population of datasets. ML techniques enable auditors to detect irregularities and unusual patterns within records, whereas traditional data analytics tools facilitate the real-time visualization and examination of extensive data collections.10 Auditors frequently manage large amounts of both structured and unstructured data from diverse sources, including financial systems, enterprise resource planning (ERP) systems, and external databases. ML algorithms, such as neural networks and decision trees, offer enhanced efficiency and accuracy in analyzing these datasets compared to traditional statistical approaches.
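As a rough illustration of screening a full population rather than a sample, the sketch below scores every amount with a modified z-score based on the median and the median absolute deviation. This is a simple statistical screen chosen for brevity, not one of the ML algorithms named above. The constant 0.6745 and the cutoff of 3.5 follow a commonly used convention, and the amounts are invented.

```python
from statistics import median

def flag_outliers(amounts, threshold=3.5):
    """Return indexes of amounts whose modified z-score exceeds the threshold."""
    center = median(amounts)
    mad = median([abs(a - center) for a in amounts])
    if mad == 0:
        return []  # no spread to measure against, so nothing is flagged
    return [i for i, a in enumerate(amounts)
            if 0.6745 * abs(a - center) / mad > threshold]

# Hypothetical population of payment amounts; every record is examined.
amounts = [102.5, 98.0, 110.0, 95.5, 101.0, 99.0, 5400.0, 104.0, 97.5, 100.0]
print(flag_outliers(amounts))  # prints [6]
```

Because the median and the median absolute deviation are barely affected by the extreme value, the one unusual payment stands out clearly instead of distorting the baseline it is measured against.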

ML algorithms can efficiently automate multiple regular audit tasks, including extracting data from financial statements, invoices, and other documents, as well as performing data input control, categorization, and reconciliation. By automating these procedures, auditors can greatly diminish the requirement for manual work and the time spent on administrative tasks, allowing them to focus on more crucial activities such as analysis and decision making. Additionally, this reduces the possibility of human error in audits by automating monotonous processes and data processing. “ML algorithms can detect patterns, make predictions, and improve audit quality by reducing manual efforts and minimizing errors associated with manual processes.”11
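To make the idea of automated reconciliation concrete, here is a minimal rule-based sketch. It is deliberately not a learned model; it only shows the kind of repetitive matching work that can be taken off an auditor's desk. The reference numbers, the amounts, and the tolerance of 0.01 are assumptions made for illustration.

```python
def reconcile(ledger, bank, tolerance=0.01):
    """Compare two {reference: amount} mappings and report the differences."""
    matched, mismatched = [], []
    for ref in sorted(ledger.keys() & bank.keys()):
        if abs(ledger[ref] - bank[ref]) <= tolerance:
            matched.append(ref)
        else:
            mismatched.append(ref)
    return {
        "matched": matched,
        "amount_mismatch": mismatched,
        "missing_in_bank": sorted(ledger.keys() - bank.keys()),
        "missing_in_ledger": sorted(bank.keys() - ledger.keys()),
    }

# Hypothetical extracts from a general ledger and a bank statement.
ledger = {"INV-001": 250.00, "INV-002": 1200.00, "INV-003": 75.50}
bank = {"INV-001": 250.00, "INV-002": 1250.00, "INV-004": 40.00}

result = reconcile(ledger, bank)
for category, refs in result.items():
    print(category, refs)
```

Only the three exception categories need human attention; the matched items require no manual review.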

Predictive Accuracy

Fraud detection is an essential component of auditing, and ML provides strong methods for improving fraud detection capabilities. A study has demonstrated the potential of utilizing ML techniques to identify fraudulent actions by evaluating extensive amounts of transactional data.12 ML algorithms can analyze transactional data, user behaviors, and other relevant factors to identify anomalies and suspicious patterns that may indicate fraudulent activity.

Various types of models are utilized in different contexts. Take, for example, the benefits of supervised learning at a commercial level in the context of healthcare insurance claims. Supervised learning models can be used to predict the frequency of future claims based on historical data. They can detect fraudulent claims and segment customers based on their risk profiles. A study, “Fraud Detection in Healthcare Insurance Claims Using Machine Learning,” found that logistic regression resulted in an accuracy of 80.36%, precision of 97.62%, recall of 80.39%, an F1 score of 88.17% and a specificity of 80%. Another study conducted using MATLAB demonstrated that the support vector machine method is capable of precisely recognizing financial statements. It showed an error rate of less than 10% and a recognition accuracy of more than 90%.13
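For readers less familiar with these measures, the sketch below shows how they are conventionally derived from the four cells of a confusion matrix. The counts passed to the function are hypothetical and are not taken from the study. The final lines check that the reported F1 score is consistent with the reported precision and recall.

```python
def classification_metrics(tp, fp, tn, fn):
    """Standard metrics from true/false positives and negatives.

    Assumes every denominator is nonzero.
    """
    precision = tp / (tp + fp)      # share of flagged items that were fraud
    recall = tp / (tp + fn)         # share of fraud that was flagged
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,
        "specificity": tn / (tn + fp),  # share of legitimate items left alone
        "f1": 2 * precision * recall / (precision + recall),
    }

def f1_from(precision, recall):
    """F1 is the harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)

# Hypothetical counts, for illustration only.
metrics = classification_metrics(tp=40, fp=10, tn=45, fn=5)
print(metrics)

# Consistency check on the figures quoted above (97.62% and 80.39%).
reported_f1 = f1_from(0.9762, 0.8039)
print(round(reported_f1 * 100, 2))  # prints 88.17
```

High precision with lower recall, as in the quoted results, means that flagged claims were usually fraudulent but that a share of fraudulent claims went unflagged.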

ML Risk and Audit Processes

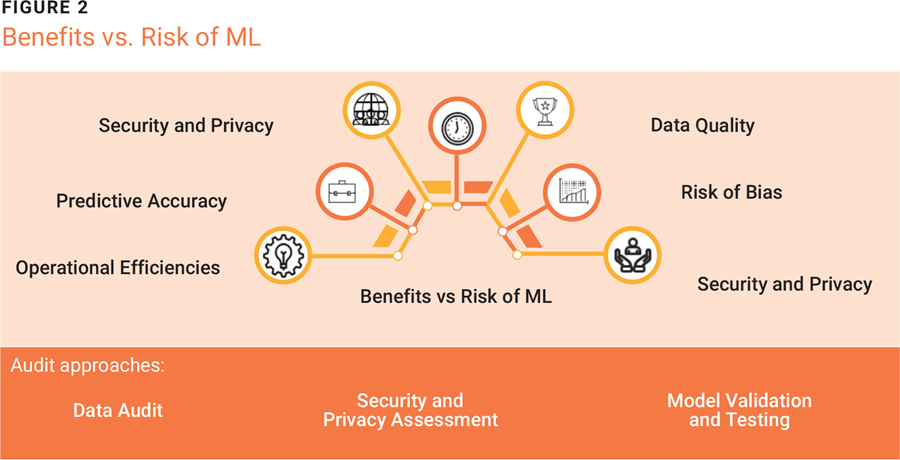

ML can be used to enhance audit processes in several ways, proving a valuable tool for increasing efficiency. However, the introduction of ML into audit presents risk that must be addressed (figure 2).

Data Quality Risk

ML systems rely on data—if the data is not clean, the models will not perform well. This is consistent with the proverbial phrase, “garbage in, garbage out,” or GIGO, which means that the quality of the system outputs is directly dependent on the quality of the inputs. In the realm of ML, the GIGO principle emphasizes the importance of high-quality data for achieving accurate and reliable results.

Security and Privacy Risk

ML systems are software systems and, like any other system, can be attacked. Although they are built for prediction, their core architecture is susceptible to compromise. Cyberattacks may be conducted to reverse engineer a model or to manipulate the data patterns on which the model was trained, with the aim of creating an adverse outcome and generating inaccurate data. As a result, poor decisions could be made based on inaccurate financial reports, leading to business inefficiencies and ultimately lost revenue and reputational damage.

The Risk of Bias

Depending on the nature of the data input, the models created may unintentionally reinforce bias. ML models are often used for fraud detection. These models learn from historical data, so if the training data is biased toward certain patterns observed in past fraudulent activities, the model may unfairly target transactions that fit those patterns but are legitimate. This so-called push bias can emerge at various stages, including data collection, preprocessing, and model training.

In this context, the term “push bias” refers to the reinforcement of preexisting biases in the data used to train ML models. Bias occurs when models learn from skewed or unrepresentative data and then use that bias to make decisions. In fraud detection, for example, if a model is primarily trained on electronic transactions, it may mistake cash transactions for fraudulent anomalies simply because they are less common. This biased decision making has an impact on audit processes by increasing false positives, overburdening fraud investigation teams, and potentially broadening the audit scope to address inefficiencies caused by biased outcomes. To mitigate these effects, auditors must ensure that diverse data is used in training and that models are regularly updated to reflect new transaction patterns. Unchecked, biased models can have serious consequences, including legal and reputational risk for financial institutions.

In the banking sector specifically, an ML model that is trained primarily on electronic transaction data and has minimal exposure to cash transactions may categorize cash transactions as anomalies because they are infrequent in the training dataset. This may lead to an increased incidence of false positives in cash transactions, while potentially overlooking fraudulent electronic transactions that do not conform to the patterns observed in the training data. To alleviate such biases, auditors should verify that the training data is extensive and encompasses a diverse range of transaction types. Furthermore, continuous monitoring and updating of ML models is essential to accommodate emerging transaction patterns and mitigate biases that may influence audit results.
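One way an auditor might test for this effect is to compare false positive rates across transaction types. The sketch below is a minimal illustration under stated assumptions: the record layout (transaction type, whether the model flagged it, whether it was actually fraud) and all of the counts are hypothetical.

```python
from collections import defaultdict

def false_positive_rate_by_group(records):
    """records: iterable of (group, flagged_by_model, actually_fraud).

    Returns, per group, the share of legitimate transactions that were flagged.
    """
    flagged_legit = defaultdict(int)
    total_legit = defaultdict(int)
    for group, flagged, fraud in records:
        if not fraud:
            total_legit[group] += 1
            if flagged:
                flagged_legit[group] += 1
    return {g: flagged_legit[g] / total_legit[g] for g in total_legit}

# Hypothetical model output: cash is rare in the data and flagged far more often.
records = (
    [("cash", True, False)] * 4
    + [("cash", False, False)] * 6
    + [("electronic", True, False)] * 2
    + [("electronic", False, False)] * 98
    + [("electronic", True, True)] * 3   # real fraud, ignored by this measure
)

rates = false_positive_rate_by_group(records)
print(rates)
```

A large gap between groups, such as 40% for cash against 2% for electronic transactions in this invented data, would be a signal to examine how well each transaction type is represented in the training data.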

Financial institutions may encounter litigation, reputational harm, and monetary sanctions if their AI systems are determined to exhibit bias. This may arise from algorithmic decisions that unintentionally discriminate against consumers. Biased ML models can result in operational inefficiencies. Excessively categorizing legitimate transactions as fraudulent may escalate the workload for fraud investigation teams, thereby causing audit scope expansion.

Audit Approaches

The complex and dynamic characteristics of ML systems arise from their dependence on extensive datasets and sophisticated algorithms that can adapt over time. This makes conventional auditing methods, typically static and manual, less effective. The International Organization of Supreme Audit Institutions (INTOSAI) emphasizes that ML systems pose risk including data security concerns, potential biases in decision making, and the necessity for rigorous project management and documentation practices.14

Auditors must adopt new techniques and frameworks specifically designed for ML systems to tackle these challenges. For example, ISACA® emphasizes the importance of transparency, accountability, and a thorough understanding of the ML life cycle.15 Auditors are encouraged to focus on data quality, model development processes, and the potential biases that could be embedded in ML algorithms.

A proposed pragmatic auditing approach involves developing an ML life cycle model that emphasizes documentation, accountability, and quality assurance.16 This approach is crucial for aligning auditors with organizations and ensuring that the audits they conduct are comprehensive and effective.

Adapting auditing approaches to effectively evaluate ML systems involves understanding the unique risk and complexities these systems present, leveraging new auditing frameworks and guidelines, and emphasizing continuous documentation and accountability throughout the ML life cycle. This shift is essential for auditors to keep pace with advancements in AI and ML technologies.

Data Audit

Auditors need to ensure that the data used to train and operate ML models is reliable, unbiased, compliant with regulatory requirements, and high quality. This can be done using the following data audit approach:

- Ensure that the data supplied for ML modeling is precise, comprehensive, and uniform. Attention must be paid to the completeness and accuracy of information, including the reliability of the data source, to ensure that no discrepancies exist between the data source and the AI model. Access and quality controls must be instituted to preserve data integrity within the AI model, and mechanisms should be established to regulate the end-user output of information from the model to prevent any manipulations prior to its application in decision making and judgments.

- Verify that the ML models perform as expected based on the quality of the data used for training and evaluation.

- Ensure that the methods of data collection, processing, and utilization within the ML system are transparent and adhere to regulatory standards.
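The completeness and accuracy checks described above can be partly automated. The following is a minimal sketch, assuming records arrive as dictionaries with hypothetical `id`, `amount`, and `date` fields. Treating a negative amount as an issue is also an assumption, since credits and reversals may be legitimate in a given dataset.

```python
def profile_records(records, required_fields):
    """Count basic data quality issues in a list of dict records."""
    seen_ids = set()
    issues = {"missing_value": 0, "duplicate_id": 0, "negative_amount": 0}
    for row in records:
        # Completeness: every required field must be present and non-empty.
        if any(row.get(field) in (None, "") for field in required_fields):
            issues["missing_value"] += 1
        # Uniqueness: the same identifier should not appear twice.
        if row.get("id") in seen_ids:
            issues["duplicate_id"] += 1
        seen_ids.add(row.get("id"))
        # Validity: assumed rule that amounts should not be negative.
        amount = row.get("amount")
        if isinstance(amount, (int, float)) and amount < 0:
            issues["negative_amount"] += 1
    return issues

# Hypothetical extract supplied for model training.
records = [
    {"id": "T1", "amount": 100.0, "date": "2024-01-05"},
    {"id": "T2", "amount": None, "date": "2024-01-06"},
    {"id": "T2", "amount": 55.0, "date": "2024-01-06"},
    {"id": "T3", "amount": -20.0, "date": ""},
]

issues = profile_records(records, ["id", "amount", "date"])
print(issues)
```

Counts like these give the auditor evidence of data quality before any model is trained, in keeping with the GIGO principle discussed earlier.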

Security and Privacy Assessment

ML tools are powerful, but they often rely on sensitive data—for example, financial information or personal medical records. The ML model should be secure and should not be exposed to any vulnerability that compromises privacy. The security and privacy assessment involves examining how well a system protects data and ensures privacy. The objectives of this approach include:

- Verifying that proper controls are in place to restrict access to sensitive data and ML models to authorized personnel or systems

- Ensuring that the ML system adheres to relevant security and privacy regulations, including the EU General Data Protection Regulation (GDPR), to mitigate legal and reputational risk

- Evaluating the encryption of data during storage, transmission, or processing to safeguard it from interception or unauthorized access
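The first objective, verifying that access is restricted to authorized personnel, lends itself to a simple automated test. The sketch below compares an access log against an authorization list. The user names, resource names, and log format are hypothetical, and a real review would draw them from the organization's identity and logging systems.

```python
def review_access(access_log, authorized):
    """Return log entries where a user touched a resource without authorization."""
    return [
        entry for entry in access_log
        if entry["user"] not in authorized.get(entry["resource"], set())
    ]

# Hypothetical authorization matrix: resource -> users allowed to access it.
authorized = {
    "training_data": {"alice", "bob"},
    "fraud_model": {"alice"},
}

# Hypothetical access log extracted for the audit period.
access_log = [
    {"user": "alice", "resource": "fraud_model"},
    {"user": "bob", "resource": "fraud_model"},
    {"user": "carol", "resource": "training_data"},
    {"user": "bob", "resource": "training_data"},
]

exceptions = review_access(access_log, authorized)
for entry in exceptions:
    print(entry["user"], "accessed", entry["resource"])
```

Resources that are missing from the authorization matrix are treated as having no authorized users, so any access to them is reported as an exception.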

Model Validation and Testing

The model validation and testing approach examines how well the ML models understand and predict outcomes by testing them with different examples. The purpose of this approach is to ensure that the models give accurate, unbiased answers. The objectives of this approach include:

- Evaluating the correctness and precision of predictions made by ML models against known outcomes

- Assessing the performance of ML models under various conditions (such as noisy or incomplete data) to ensure reliability in real-world scenarios

- Investigating the types and sources of errors made by ML models to understand their limitations and potential areas for improvement
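These three objectives can be sketched in a few lines. The example below is illustrative only: `model` is a hypothetical stand-in for a trained model (a fixed threshold on the amount), the test examples are invented, and Gaussian noise with a fixed seed is just one possible way to simulate noisy input.

```python
import random

def model(amount):
    """Stand-in for a trained model: flags amounts above a fixed threshold."""
    return amount > 5000.0

def accuracy(examples):
    """Share of examples where the prediction matches the known outcome."""
    correct = sum(1 for amount, label in examples if model(amount) == label)
    return correct / len(examples)

def add_noise(examples, scale, seed=0):
    """Perturb each amount with Gaussian noise; the seed keeps runs repeatable."""
    rng = random.Random(seed)
    return [(amount + rng.gauss(0.0, scale), label) for amount, label in examples]

def misclassified(examples):
    """List the examples the model gets wrong, for error analysis."""
    return [(amount, label) for amount, label in examples if model(amount) != label]

# Hypothetical test set: (amount, known outcome).
clean = [(120.0, False), (4800.0, False), (5200.0, True),
         (9000.0, True), (300.0, False), (7500.0, True)]

print("clean accuracy:", accuracy(clean))
noisy = add_noise(clean, scale=500.0)
print("noisy accuracy:", accuracy(noisy))
print("errors under noise:", misclassified(noisy))
```

Comparing the two accuracy figures shows how sensitive the model is to degraded input, and the list of misclassified examples points to where its limits lie. In this sketch, amounts close to the threshold are the ones most exposed to noise.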

Conclusion

The integration of ML into auditing practices enables auditors to enhance the accuracy, speed, and quality of audits. By leveraging ML algorithms, audit teams can move beyond traditional representative sampling techniques and review entire populations for anomalies. This shift allows for more directed and intentional testing. Moreover, ML models can learn from auditors’ conclusions and apply similar logic to other items with similar characteristics. While ML technology for auditing is still in its infancy, larger organizations are actively exploring its potential. However, challenges remain, including addressing interpretability constraints and ensuring that ML tools reach their full capabilities. The future of auditing lies in harnessing the power of ML to improve decision support and streamline processes, ultimately enhancing the profession’s effectiveness and efficiency.

Endnotes

1 Gupta, S.; “How to Learn Machine Learning From Scratch,” Springboard, 11 March 2022

2 IBM, “What Is Supervised Learning?”

3 Delua, J.; “Supervised vs Unsupervised Learning: What’s the Difference?,” IBM, 12 March 2021

4 Alenzi, H.; Aljehane, N.; “Fraud Detection in Credit Cards Using Logistic Regression,” International Journal of Advanced Computer Science and Applications (IJACSA), vol. 11, iss. 12, 2020

5 Gupta, R.; Gill, N.; “Financial Statement Fraud Detection Using Text Mining,” International Journal of Advanced Computer Science and Applications (IJACSA), vol. 3, iss. 12, 2012

6 Shalev-Shwartz, S.; Ben-David, S.; Understanding Machine Learning: From Theory to Algorithms, Cambridge University Press, UK, 2014

7 Byrnes, P.; “Automated Clustering for Data Analytics,” Journal of Emerging Technologies in Accounting, vol. 16, iss. 2, 2019

8 Chauhan, A.; “Association Rule Mining – Concept and Implementation,” Analytics Vidhya, 26 April 2020

9 Guo, J.; Liu, S.; “Research on the Application of Data Mining Techniques in the Audit Process,” Cyber Security Intelligence and Analytics, Springer, Switzerland, 2021

10 Samweez, A.; “The Role of Artificial Intelligence (AI) and Data Analytics in Auditing,” Crowe, 14 March 2023

11 Dickey, G.; Blanke, S.; Seaton, L.; “Machine Learning in Auditing,” The CPA Journal, June 2019

12 Ali, A.; Razak, S.A.; Othman, S.H.; et al.; “Financial Fraud Detection Based on Machine Learning: A Systematic Literature Review,” Applied Sciences, vol. 12, iss. 19, 2022

13 Nabrawi, E.; Alanazi, A.; “Fraud Detection in Healthcare Insurance Claims Using Machine Learning,” Risks, vol. 11, iss. 9, 2023

14 Li, H.; Yazdi, M.; Nedjati, A.; et al.; “Harnessing AI for Project Risk Management: A Paradigm Shift,” 8 March 2024

15 Prasad, V.; “AI Algorithm Audits: Key Control Considerations,” ISACA®, 2 August 2024

16 Benbouzid, D.; Plociennik, C.; Lucaj, L.; et al.; “Pragmatic Auditing: A Pilot-Driven Approach for Auditing Machine Learning Systems,” arXiv, 21 May 2024

KGODISO CHILOANE

Is a senior IT auditor with a focus on IT controls and application controls in both internal and external audits. He is keen to explore the influence of next-generation technologies within the audit space, with the aim of understanding how machine learning (ML) can improve the audit process.

FATIH ISIK

Is an experienced professional with expertise in IT risk consulting and IT auditing. He is particularly interested in auditing ML and artificial intelligence (AI) systems.

EUGENE ZITA

Is a junior IT auditor with a particular interest in exploring the transformative potential of ML and AI in the auditing field.