Large language models (LLMs) have shown exceptional capabilities in understanding and generating human-like text, underpinning major advances in natural language processing (NLP). The efficacy of these models in specific tasks often hinges on how the input prompts are constructed, a practice known as prompt engineering. Prompt engineering involves the careful crafting of input queries or commands that guide LLMs to generate desired outputs. This practice requires a solid understanding of the model’s architecture and of how it processes and interprets natural language inputs. It is worth dissecting the methodologies behind prompt engineering, evaluating its impact on the effectiveness of LLMs, and exploring the broader implications for artificial intelligence (AI) development. By mastering prompt engineering, IT professionals can turn LLMs into tools that drive innovation, enhance productivity, and strengthen security within organizations.

Prompt Engineering Techniques

Crafting prompts has evolved into a multifaceted discipline with a variety of techniques, each serving a distinct purpose and suited to tasks of different complexity. No single approach fits every case because the tasks LLMs are expected to perform are so diverse, ranging from simple question answering to intricate creative writing. Understanding the breadth of these techniques helps developers and users tailor their interactions to elicit the most accurate, relevant, and insightful responses.

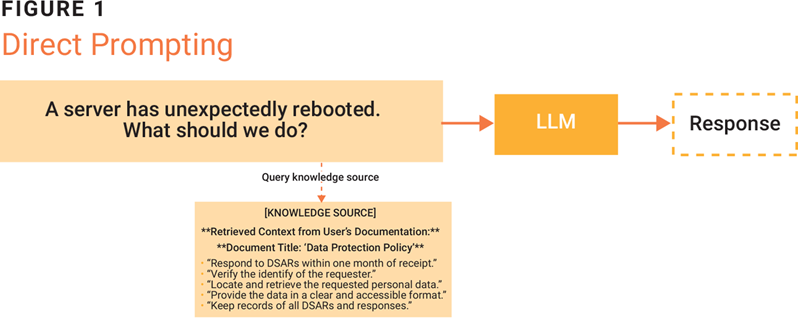

Direct Prompting

Direct prompting is the most straightforward technique, wherein the model is provided a direct question or instruction (figure 1). Although this method is simple and easy to implement, it is often less effective for complex tasks, which may require additional context that a bare question or instruction does not supply.

Direct prompting requires only two steps:

- Prompt creation—Formulate a clear and concise prompt, directly stating the desired task or question.

- Text generation—The model processes the prompt and generates a response, relying on its internal knowledge and learned patterns to produce the output.
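
The two steps above can be sketched in a few lines of Python. This is a minimal illustration, not a definitive implementation: `generate` is a placeholder for whatever function sends text to an LLM, and `echo_model` is a hypothetical stand-in used only so the example runs without a real model.

```python
from typing import Callable


def direct_prompt(question: str) -> str:
    """Step 1: state the task or question directly, with no extra context."""
    return question.strip()


def run_direct(question: str, generate: Callable[[str], str]) -> str:
    """Step 2: pass the prompt to the model and return its response."""
    return generate(direct_prompt(question))


def echo_model(prompt: str) -> str:
    # Hypothetical stand-in for a real LLM call (assumption, not a real API).
    return f"[model response to: {prompt}]"


if __name__ == "__main__":
    print(run_direct("  What is the principle of least privilege? ", echo_model))
```

Because the prompt carries nothing beyond the question itself, the quality of the answer depends entirely on what the model already knows.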

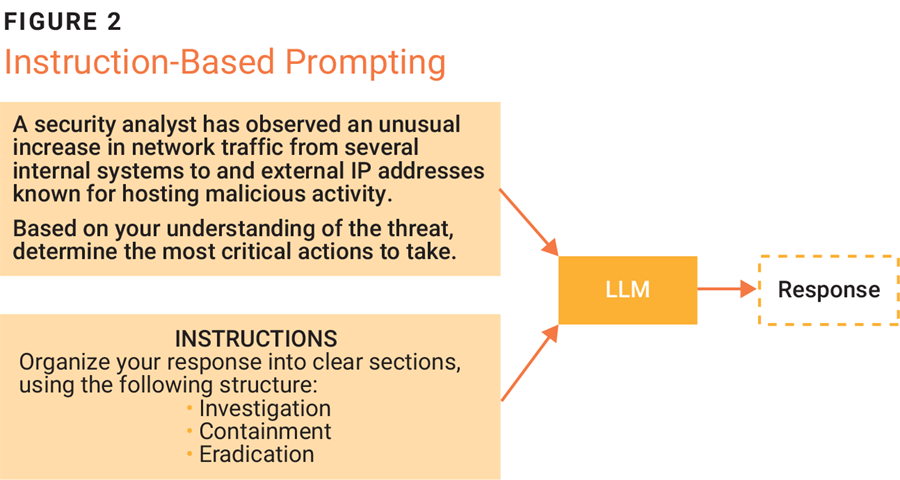

Instruction-Based Prompting

With instruction-based prompting, the model is given explicit instructions regarding the format, style, or content of the desired response (figure 2). Setting clear expectations can lead to more focused and relevant outputs. This method helps achieve precise responses that are more closely aligned with user needs.

Instruction-based prompting involves several steps:

- Task instruction—Formulate a clear and concise instruction, specifying the task the model should perform (e.g., “summarize the following article,” “translate this sentence into French,” “write a poem about nature”).

- Additional guidelines (optional)—If the complexity of the task requires it, provide additional guidelines to refine the model’s output (e.g., “keep the summary under 100 words,” “use formal language in the translation,” “include rhyming in the poem”).

- Prompt construction—Combine the task instruction and any additional guidelines to form a complete prompt.

- Text generation—The model then processes the prompt and generates a response that attempts to follow the instructions.
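
As a rough sketch of the prompt construction step, the function below combines a task instruction, optional guidelines, and the content to be processed. The function name and the "Instruction:" and "Guidelines:" labels are assumptions chosen for illustration; other layouts may work equally well.

```python
from typing import Optional, Sequence


def build_instruction_prompt(
    task: str,
    content: str,
    guidelines: Optional[Sequence[str]] = None,
) -> str:
    """Combine the task instruction and any guidelines into one prompt."""
    lines = [f"Instruction: {task}"]
    if guidelines:
        # Guidelines are optional and only added when the task needs them.
        lines.append("Guidelines:")
        lines.extend(f"- {g}" for g in guidelines)
    lines.append("")  # blank line separates instructions from the content
    lines.append(content)
    return "\n".join(lines)


if __name__ == "__main__":
    print(
        build_instruction_prompt(
            "Summarize the following article.",
            "<article text goes here>",
            ["Keep the summary under 100 words.", "Use formal language."],
        )
    )
```

The resulting string is what would be sent to the model in the text generation step.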

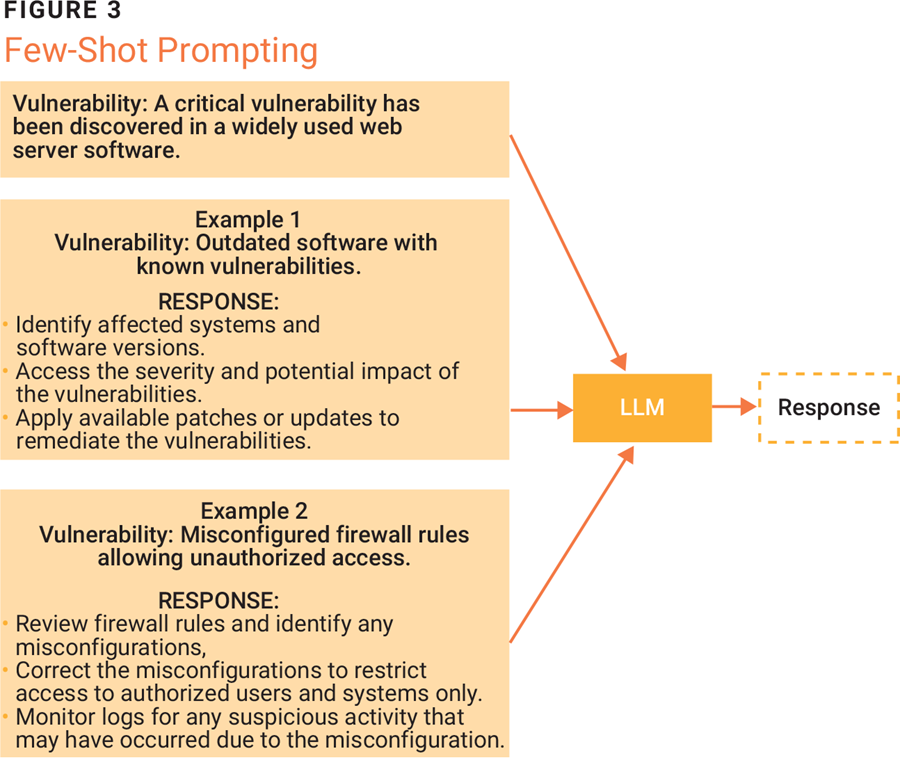

Few-Shot Prompting

Few-shot prompting takes advantage of the LLM’s ability to learn from a limited number of examples. When the prompt includes several demonstrations of input-output pairs, the model can infer the pattern and apply it to new inputs without any change to its weights (figure 3). This technique is particularly useful when explicit training data is limited but a high level of adaptation is required.

Four actions are required for few-shot prompting:

- Task definition—Clearly define the task the model should perform, such as summarizing a text, translating a sentence, or answering a question.

- Example selection—Carefully select a few (typically two to five) high-quality examples that demonstrate the desired input-output behavior. These examples should be relevant to the task and diverse enough to meet the task’s requirements.

- Prompt construction—Create a prompt that includes the task definition, the selected examples, and the new input for which the model should generate a response. The prompt should be structured in a way that is easy for the model to understand and follow.

- Text generation—The model processes the prompt, using the examples as a guide to understand the task and generate a response for the new input. The quality of the response often depends on the quality and relevance of the examples provided.
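
One way to assemble such a prompt is sketched below. The "Input:" and "Output:" labels, the function name, and the sample log lines are assumptions made for illustration; the only essential idea is that the examples and the new input share the same layout, with the final output left blank for the model to complete.

```python
from typing import Sequence, Tuple


def build_few_shot_prompt(
    task_definition: str,
    examples: Sequence[Tuple[str, str]],
    new_input: str,
) -> str:
    """Build a prompt from a task definition, example pairs, and a new input."""
    parts = [task_definition.strip(), ""]
    for source, target in examples:
        parts.append(f"Input: {source}")
        parts.append(f"Output: {target}")
        parts.append("")
    # The new input uses the same layout, with the output left open.
    parts.append(f"Input: {new_input}")
    parts.append("Output:")
    return "\n".join(parts)


if __name__ == "__main__":
    examples = [
        ("Login from known device at 09:02", "benign"),
        ("500 failed logins from one IP in 60 seconds", "suspicious"),
        ("Scheduled backup completed", "benign"),
    ]
    print(
        build_few_shot_prompt(
            "Classify each log line as benign or suspicious.",
            examples,
            "Admin account created at 03:14 from unknown host",
        )
    )
```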

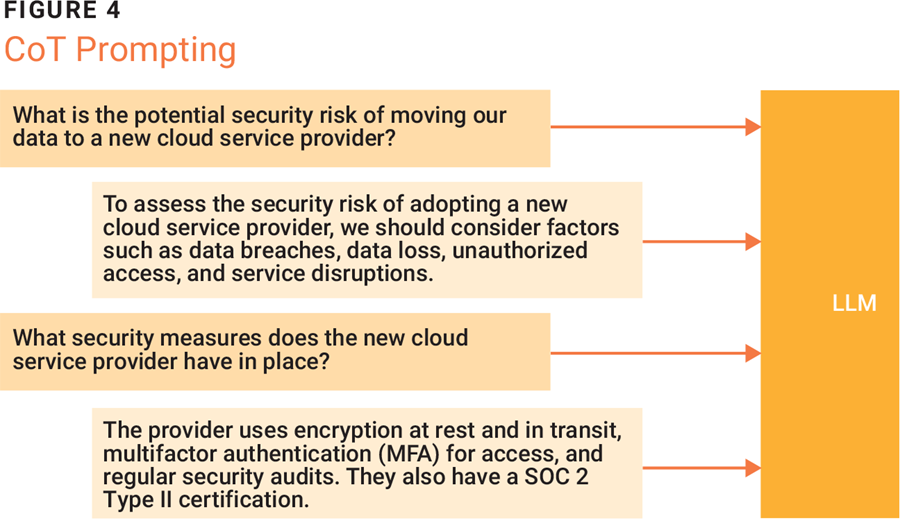

CoT Prompting

Chain-of-thought (CoT) prompting is a technique used to enhance the reasoning abilities of LLMs.1 It involves breaking down a complex problem into smaller intermediate steps and guiding the model through a chain of thought to arrive at the solution. This mimics the way humans approach problem solving, making the reasoning process more transparent and improving the accuracy of the model’s output (figure 4).

To perform CoT prompting, follow these three steps:

- Demonstration—Provide the model with examples that explicitly lay out the problem-solving process, step by step. These demonstrations serve as a guide for the model, allowing it to follow a similar reasoning pattern.

- Prompting—Encourage the model to generate its own chain of thought when presented with a new problem, articulating the steps it takes to arrive at the answer. This can be done by including phrases such as “think step by step” in the prompt.

- Evaluation—Evaluate the model’s output based not only on the final answer but also on the quality and coherence of the reasoning steps articulated in the chain of thought.
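
The three steps can be illustrated with a small sketch. It assumes a simple "Q:/A:" layout and a "Final answer:" marker, both of which are conventions chosen for this example rather than anything prescribed by the technique. `build_cot_prompt` covers demonstration and prompting; `split_reasoning` supports evaluation by separating the reasoning from the final answer so each can be reviewed on its own.

```python
from typing import List, Optional, Sequence, Tuple

Demo = Tuple[str, List[str], str]  # (question, reasoning steps, answer)


def build_cot_prompt(demonstrations: Sequence[Demo], question: str) -> str:
    """Show worked examples, then ask the model to reason step by step."""
    parts: List[str] = []
    for q, steps, answer in demonstrations:
        parts.append(f"Q: {q}")
        parts.append("A: Let's think step by step.")
        for i, step in enumerate(steps, 1):
            parts.append(f"{i}. {step}")
        parts.append(f"Final answer: {answer}")
        parts.append("")
    parts.append(f"Q: {question}")
    parts.append("A: Let's think step by step.")
    return "\n".join(parts)


def split_reasoning(response: str) -> Tuple[str, Optional[str]]:
    """Split a response into (reasoning, final answer) for evaluation."""
    reasoning, marker, answer = response.rpartition("Final answer:")
    if not marker:
        # No marker found: treat the whole response as reasoning.
        return response.strip(), None
    return reasoning.strip(), answer.strip()


if __name__ == "__main__":
    demos = [
        (
            "A server logs 120 failed logins per hour. How many in 3 hours?",
            ["Each hour has 120 failed logins.", "3 hours gives 3 x 120 = 360."],
            "360",
        )
    ]
    print(build_cot_prompt(demos, "A scanner checks 40 hosts per minute. How many in 5 minutes?"))
```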

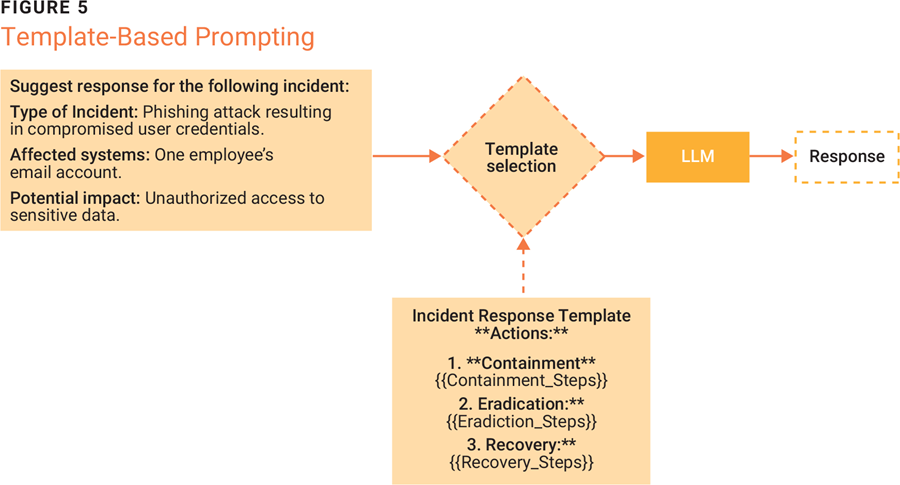

Template-Based Prompting

Template-based prompting uses a structured format, or template, for prompts, which ensures consistency across different queries and guides the model to produce the desired outputs (figure 5). This approach is especially valuable when specific information needs to be systematically extracted from or inserted into a response, because the fixed structure makes the generated text easier to control and customize.

Three steps are involved in template-based prompting:

- Template creation—Design a template with specific placeholders or variables that will be replaced with dynamic content during text generation. These placeholders can represent specific information such as names, dates, or any relevant details.

- Input data—Provide the model with input data, which includes the values to be inserted into the placeholders of the template. This input can come from a variety of sources, including user input, structured data, or even other language models.

- Text generation—The model uses the template as a guide, inserting the input data into the appropriate placeholders and generating text that conforms to the structure of the template. This process can involve sophisticated language generation techniques, such as those employed by GPT-3 or other advanced models.
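
A minimal sketch of template creation and input data follows, using Python's standard `string.Template`. In this sketch the placeholders are filled by ordinary code before the prompt reaches the model, which is one common arrangement and an assumption here; the template text and field names are hypothetical.

```python
from string import Template
from typing import Mapping

# Template creation: $-prefixed names mark the placeholders.
INCIDENT_TEMPLATE = Template(
    "Write a short incident summary using the details below.\n"
    "System: $system\n"
    "Date: $date\n"
    "Finding: $finding\n"
    "Summary:"
)


def fill_template(template: Template, values: Mapping[str, str]) -> str:
    """Insert the input data into the placeholders.

    substitute() raises KeyError if a placeholder has no value, which
    catches incomplete input before the prompt is sent to a model.
    """
    return template.substitute(values)


if __name__ == "__main__":
    print(
        fill_template(
            INCIDENT_TEMPLATE,
            {
                "system": "payroll",
                "date": "2024-01-15",
                "finding": "unpatched web server",
            },
        )
    )
```

Keeping the template separate from the data means the same structure can be reused across many queries, which is where the consistency benefit comes from.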

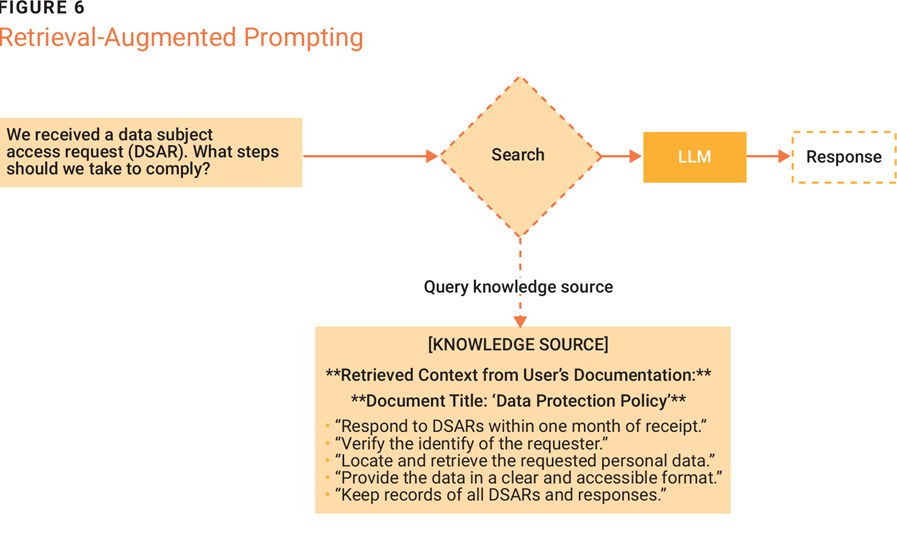

RAP

Retrieval-augmented prompting (RAP) is a technique used to enhance the capabilities of LLMs by combining their natural language–generation capacity with the ability to retrieve relevant information from external knowledge sources (figure 6).2

To perform RAP, four actions should be taken:

- Query formulation—Analyze the query to determine whether the LLM’s internal knowledge is sufficient to provide an accurate answer. If not, transform the query into a search query to retrieve relevant information from an external source.

- Information retrieval—Use the search query to access a knowledge base, which can be a structured database, a collection of documents, or even the Internet. The most relevant information is retrieved based on the query.

- Prompt augmentation—Integrate the retrieved information into the original query, creating an augmented prompt that provides the model with additional context and knowledge.

- Text generation—The model processes the augmented prompt, utilizing both its internal knowledge and the retrieved information to generate a more accurate and informative response.
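
The retrieval and augmentation steps can be sketched with a toy in-memory knowledge base. This example is deliberately simplified and rests on several assumptions: it always retrieves rather than first judging whether the model's internal knowledge is sufficient, it ranks documents by plain word overlap instead of a real search engine or vector index, and all names and sample documents are hypothetical.

```python
import re
from typing import List, Sequence, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: Sequence[str], top_k: int = 2) -> List[str]:
    """Information retrieval: rank documents by word overlap with the query."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in documents]
    scored = [(score, doc) for score, doc in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable for ties
    return [doc for _, doc in scored[:top_k]]


def augment_prompt(query: str, passages: Sequence[str]) -> str:
    """Prompt augmentation: place retrieved passages ahead of the question."""
    if not passages:
        return query
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Use the context below to answer.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    knowledge_base = [
        "Password rotation policy requires changes every 90 days.",
        "The cafeteria opens at 8 am.",
        "Multifactor authentication is mandatory for remote access.",
    ]
    question = "How often does the password rotation policy require changes?"
    print(augment_prompt(question, retrieve(question, knowledge_base, top_k=1)))
```

The augmented prompt is then passed to the model for text generation, so the answer can draw on the retrieved passage as well as the model's own knowledge.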

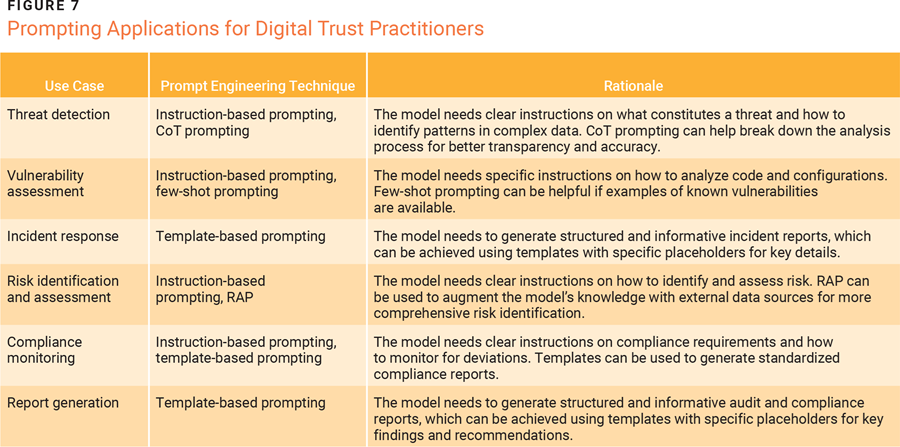

Applications

The strategic deployment of prompt engineering can significantly enhance the capabilities of LLMs in the fields of security, risk, audit, and governance (figure 7).

Challenges

While prompt engineering offers immense potential, it is not without its hurdles. The intricacies of crafting effective prompts present several challenges that warrant careful navigation.

Design Complexity

Crafting effective prompts for LLMs requires a mix of technical skills and a nuanced understanding of the specific tasks and potential biases inherent in the model’s training data. Prompt engineers must carefully tailor prompts for each task, ranging from simple data queries to complex creative content generation, while considering any biases that could affect the model’s output.

Ethical Considerations

Ethical challenges in prompt engineering are significant, especially in sensitive areas such as political content and mental health applications. It is crucial to design prompts that do not perpetuate biases or enable manipulative outcomes. Maintaining high ethical standards ensures that AI’s contributions are both positive and fair.

Technical Limitations

LLM output depends heavily on the quality and structure of the prompt, and small changes in wording can produce noticeably different responses, which limits the model’s flexibility and adaptability. This dependence necessitates continuous refinement of prompts and ongoing research into more robust model architectures that are less sensitive to prompt nuances and can generalize across a broader range of tasks.

In addressing these challenges, prompt engineering must balance complex design requirements, ethical integrity, and technical advancements to fully and responsibly leverage AI’s potential.

Future Directions

The trajectory of prompt engineering points toward a future in which its capabilities are further amplified and its accessibility is broadened. Automation, cross-disciplinary insights, and the establishment of ethical guidelines are poised to shape the next phase of this dynamic field.

Automation in Prompt Engineering

The future of prompt engineering may greatly benefit from the integration of AI-driven tools designed to automate the creation and refinement of prompts. Such tools could use machine learning algorithms to analyze the effectiveness of different prompts in real time, suggesting modifications or entirely new prompts based on desired outcomes. This approach not only enhances the accessibility of prompt engineering for nonexperts but also increases its effectiveness by enabling more rapid iterations and optimizations. Automated systems have the potential to learn from each interaction, continuously improving their suggestions and adapting to new types of tasks and domains, thus democratizing the use of sophisticated LLMs across industries.
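
As a very small illustration of the idea, the sketch below scores candidate prompt templates against a handful of test cases and keeps the best one. It is only a toy under stated assumptions: real tools would use far richer metrics, `toy_model` is a hypothetical stand-in for an LLM, and scoring by substring match is a deliberately crude choice.

```python
from typing import Callable, Sequence, Tuple

Case = Tuple[str, str]  # (input text, expected answer)


def score_prompt(
    template: str, cases: Sequence[Case], generate: Callable[[str], str]
) -> float:
    """Return the fraction of cases whose response contains the expected answer."""
    if not cases:
        raise ValueError("at least one test case is required")
    hits = 0
    for text, expected in cases:
        response = generate(template.format(input=text))
        if expected.lower() in response.lower():
            hits += 1
    return hits / len(cases)


def best_prompt(
    candidates: Sequence[str], cases: Sequence[Case], generate: Callable[[str], str]
) -> str:
    """Pick the candidate template with the highest score."""
    return max(candidates, key=lambda t: score_prompt(t, cases, generate))


def toy_model(prompt: str) -> str:
    # Hypothetical stand-in for an LLM: it only answers cleanly when the
    # prompt asks for a one-word reply.
    if "one word" not in prompt.lower():
        return "It is hard to say without more guidance."
    return "positive" if "great" in prompt.lower() else "negative"


if __name__ == "__main__":
    cases = [
        ("The rollout went great.", "positive"),
        ("The patch broke logins.", "negative"),
    ]
    candidates = [
        "Classify the sentiment: {input}",
        "Classify the sentiment as positive or negative. "
        "Answer with one word.\nText: {input}",
    ]
    print(best_prompt(candidates, cases, toy_model))
```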

Cross-Disciplinary Approaches

In the effort to refine prompt engineering, incorporating cross-disciplinary insights from linguistics, psychology, and cognitive science could be transformative. By understanding how humans process information and language, developers can design prompts that are more naturally aligned with human reasoning patterns, making interactions with LLMs more intuitive and effective. This might involve studying linguistic structures that guide human thought processes or applying psychological principles to understand how different phrasings can affect the interpretive responses of AI. Such an approach could not only improve the user experience but also enhance the robustness and reliability of AI responses in complex scenarios.

Policy and Ethical Guidelines

As prompt engineering becomes more sophisticated, the establishment of comprehensive policy and ethical guidelines will be crucial. These guidelines should govern the design and use of prompts to prevent misuse and ensure that LLM outputs do not perpetuate bias or misinformation. Standards should address transparency in how prompts are constructed and their potential impacts, ensuring that stakeholders are aware of how AI influences the generated content. Additionally, these guidelines should encourage the ethical use of AI, promoting fairness, accountability, and respect for privacy. By setting a framework for ethical prompt engineering, the AI community can foster trust and facilitate more responsible development and deployment of these powerful technologies.

Conclusion

Prompt engineering is crucial for maximizing the effectiveness of LLMs and has particular relevance for digital trust professionals working in cybersecurity, risk, audit, and governance. As AI becomes further integrated into these domains, the ability to precisely guide AI responses through advanced prompt engineering is essential. This allows professionals to leverage AI for tasks such as vulnerability assessments, risk analysis, audit support, and compliance checks, while maintaining ethical guidelines and protecting sensitive information.

The ongoing development of prompt engineering techniques will be key in realizing AI’s transformative potential while managing its challenges. This involves improving response accuracy and efficiency, as well as safeguarding against bias and misuse. As AI’s role in society grows, responsible management of its influence is paramount.

Improving prompt engineering is not merely a technical endeavor but a societal imperative. Ensuring that AI supports human capabilities and aligns with societal norms requires collaboration among technologists, ethicists, policymakers, and users. This collective effort will help shape AI’s development, making it a positive force in our collective future.

Endnotes

1 Wei, J.; Wang, X.; et al.; “Chain-of-Thought Prompting Elicits Reasoning in Large Language Models,” 36th Conference on Neural Information Processing Systems, January 2023

2 Chen, X.; Li, L.; et al.; “Decoupling Knowledge from Memorization: Retrieval-Augmented Prompt Learning,” 36th Conference on Neural Information Processing Systems, September 2023

LALIT CHOUREY

Is a seasoned software engineer with more than a decade of experience developing scalable back-end services and distributed systems, specializing in artificial intelligence (AI) infrastructure for large language model (LLM) training. Currently a software engineer at Meta Platforms, Chourey leads a team in architecting robust systems for machine learning (ML) training. Previously, at Microsoft, he led the development of several large-scale cloud services on Azure.