Phishing attacks are a pervasive threat in the digital world, and they often lead to severe consequences such as identity theft, financial loss, and data breaches. These attacks exploit users’ trust and familiarity with online communication, making it easy for cybercriminals to deceive victims and trick them into revealing sensitive information. Tactics such as phishing emails, fraudulent text messages, fake websites, and direct device access are common. A 2024 report by Verizon showed that nearly three-quarters of all social engineering data breaches were caused by phishing attacks, primarily through emails.1

Cybercriminals are now leveraging the power of artificial intelligence (AI) to enhance their phishing schemes. A notable example is the use of AI-powered voice synthesis to impersonate a chief executive officer’s (CEO’s) voice to manipulate a UK-based energy firm into transferring €220,000 or US$243,000.2 This demonstrates how AI technologies, with the ability to automate tasks, personalize phishing messages, and improve the overall effectiveness of attacks, are a game changer in the cybercrime landscape. By analyzing large amounts of data, AI helps attackers create detailed victim profiles for more precise targeting. It also generates credible, error-free phishing messages and highly realistic fake websites, showcasing the impact of technological advancements on cybercrime.

However, AI is a double-edged sword, empowering both attackers and defenders. While cybercriminals use AI to boost the sophistication of their attacks, AI-powered security systems provide a strong line of defense. A notable example is the adoption of AI-driven threat detection to counter sophisticated threats such as the Emotet malware.3 This demonstrates how AI can play a crucial role in identifying and mitigating threats more quickly and accurately than traditional security measures. AI systems can analyze communication patterns, detect suspicious activity, and block phishing attempts before they reach users. By monitoring user behavior, AI systems can suggest security improvements such as software updates or password changes, and they can identify subtle discrepancies in emails and websites that signal phishing attempts.

The critical battle between AI-driven attacks and AI-powered defenses is ongoing. Enterprises and individuals must be aware of AI’s dual role and stay vigilant to protect themselves from increasingly sophisticated phishing schemes.

Understanding Phishing Attacks

Phishing attacks are constantly evolving, making it crucial to understand the various methods used by cybercriminals. Phishing attacks can be categorized based on their target audience, delivery method, and technical tactics. Spear phishing and whaling are prominent types of phishing attacks that target specific individuals and high-profile figures such as C-level executives, respectively. In terms of delivery, these attacks can arrive via emails (phishing), text messages (smishing), phone calls (vishing), or social media platforms. Technical tactics include the use of phishing websites, malicious applications, or prebuilt phishing kits to deceive users and steal valuable data. In real-world scenarios, phishing attacks often combine these categories to increase their chances of success, such as sending spear phishing emails (delivery method) that link to phishing websites (technical tactic) to target high-profile individuals (target audience).

A notable example was the 2016 phishing attack on high-ranking US Democratic Party figures, including Democratic National Committee (DNC) officials and Hillary Clinton’s campaign chairman, John Podesta.4 As part of a sophisticated spear phishing attack, the cybercriminals sent emails with links to a fake Google login page, tricking victims into revealing their credentials. The stolen information was later published by WikiLeaks, fueling political controversy and contributing to widespread conspiracy theories. The attack’s global impact extended beyond financial loss, as it influenced the 2016 US presidential election, heightened tensions between the United States and Russia, and sparked debates about election security and foreign interference. The scale, sophistication, and strategic targeting of high-profile individuals made this phishing attack particularly damaging, demonstrating that the consequences of such attacks can be far-reaching, extending beyond individual victims and enterprises and affecting national security and international relations.

Cornerstones of Phishing Attacks

Although they involve diverse tactics, phishing attacks share several core components that are the cornerstones of their success. Understanding these cornerstones is essential to analyzing how phishing attacks are perpetrated. The four cornerstones are targeting and profiling, phishing messages, phishing landing pages, and malware.

Targeting and Profiling

Cybercriminals employ two primary methods to target victims: random selection and specific targeting. Random selection uses manual or automated tools to scour the Internet for publicly available data in social media profiles, enterprise websites, news articles, and public records. This data can then be used to identify individuals or enterprises with potential vulnerabilities, such as outdated software or weak passwords. In contrast, specific targeting involves identifying and targeting individuals or enterprises based on their perceived value. This often involves purchasing data from data brokers or dark web marketplaces, including names, addresses, email addresses, financial information, and other sensitive data.

Phishing Messages

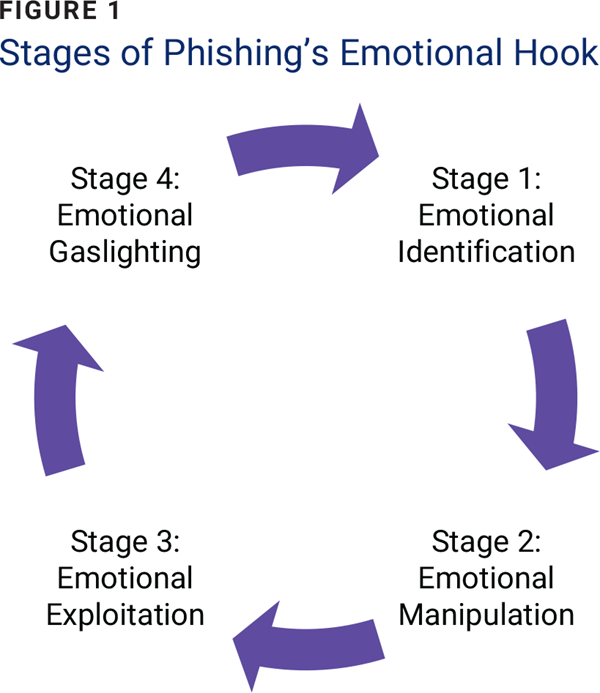

Cybercriminals tailor phishing messages based on the intended victim’s profile using three primary features: emotional hook, persuasive story, and call to action. For the emotional hook to be successful, it must take the victim through four key stages (figure 1).

At stage one, attackers may employ empathy and shared experiences to build rapport with their targets. At stage two, they may leverage emotions such as fear, shame, guilt, hope, or desire to manipulate their victims into taking specific actions. At stage three, they can exploit their victims’ vulnerability and isolation. Finally, at stage four, they may resort to gaslighting tactics, inducing denial, distortion, and self-doubt, to maintain control over their victims.

The persuasive story can be generic, but it must have a sense of authenticity and should be free of grammatical and spelling errors. The call to action expresses urgency about the cybercriminal’s intended action or requested information (e.g., clicking a link, downloading a file, providing personal information). Other elements that might be included depend on the delivery mechanism. When sending a phishing email, additional elements include sender information, subject line, and email technical configuration. When sending a phishing text message, the limit on the number of characters often necessitates the inclusion of a phishing link.

Phishing Landing Pages

Phishing landing pages are designed to mimic legitimate websites. They often replicate the look and feel of well-known brands or institutions by using similar logos, color schemes, and fonts. The ultimate goal is to deceive victims into believing they are interacting with a trusted entity and trick them into providing personal or financial information. These pages typically include phishing forms to collect sensitive data such as usernames, passwords, credit card details, or personal identification numbers.

Malware

Malware encompasses a wide range of harmful software designed to compromise systems, steal data, or disrupt operations. Cybercriminals deploy malware through a number of sophisticated techniques. Keyloggers, for instance, track and record keystrokes to capture sensitive information such as passwords and credit card details. Remote-access Trojans (RATs) give attackers full control over devices, allowing them to steal files, manipulate systems, and monitor user activity undetected. Cybercriminals often deliver malware through phishing emails or drive-by downloads that target and exfiltrate sensitive data from enterprise networks.

AI-Powered Phishing Attacks

As AI reshapes the way industries operate, it has also become a powerful tool for cybercriminals, transforming how they conduct phishing attacks. AI has enhanced the sophistication and effectiveness of all four cornerstones of phishing attacks.

Targeting and Profiling

In the past, cybercriminals found targeting and profiling potential victims time-consuming. However, AI capabilities including AI-powered bots, natural language processing (NLP), pattern recognition, and data aggregation have significantly simplified this activity. These capabilities often have legitimate applications; enterprises such as Brand24 and Hootsuite, for example, use AI-powered social media monitoring to help organizations analyze profiles and gather insights. However, these capabilities, in the wrong hands, pose a severe threat:

- Targeting—AI can combine data from different sources to create detailed profiles of targets, including information about where they work, their hobbies, and travel plans. It can even monitor the dark web for data breaches and stolen personal information, helping cybercriminals find valuable targets.

- Profiling—AI-powered bots can scan thousands of websites, social media profiles, and public records to gather information in seconds. Then, using NLP, these bots can analyze comments and identify vulnerabilities, such as users who do not understand security updates or who use outdated software. Pattern recognition can also identify weaknesses; for example, people who admit online to using weak passwords might be easier to exploit.

Phishing Messages

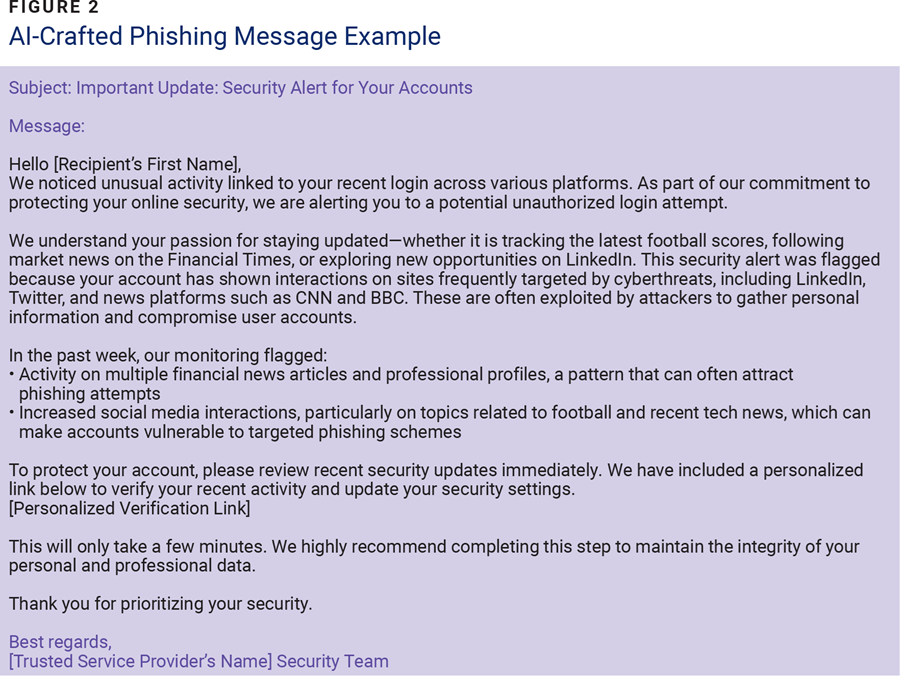

Cybercriminals once struggled to personalize phishing messages, often creating generic and impersonal content that was readily identifiable as a scam. These emails frequently contained spelling or grammatical errors and lacked emotional appeal, making them less effective. However, AI capabilities such as data analysis, sentiment analysis, and NLP have transformed how cybercriminals craft phishing messages, making them more personalized and convincing (figure 2).

Through its data and sentiment analysis capabilities, AI can delve into an intended target’s online activity with alarming precision. It can scrutinize the target’s social media posts, recent purchases, and emails. This invasive analysis allows cybercriminals to create a message that targets the emotions expressed by the intended victim and therefore seems personal and relevant. Additionally, AI-powered NLP can ensure that phishing messages are grammatically correct and sound natural, eliminating the common errors that made them suspicious. By generating and distributing more personalized phishing emails, cybercriminals increase their chances of success.

Phishing Landing Pages

In the past, it was difficult for cybercriminals to create realistic phishing landing pages that closely resembled legitimate websites. These pages required significant effort to develop, and they often lacked quality content and were static and unconvincing. However, AI capabilities such as image recognition, image synthesis, NLP, and behavioral analytics have significantly improved cybercriminals’ ability to create convincing phishing landing pages. Using image recognition and synthesis, cybercriminals can analyze legitimate websites to identify and accurately replicate key visual elements, making the phishing page nearly indistinguishable from the real one. NLP can generate text content that matches the legitimate website’s tone, style, and language. By mixing NLP and behavioral analytics, cybercriminals can simulate user interactions and ensure that phishing pages follow the same sequence of actions and user experience as the legitimate site, making it extremely difficult to detect the phishing attempt.

Malware

Creating malware has traditionally been a complex and time-consuming process that involves manually writing code to evade detection by antivirus software. However, AI capabilities such as generative adversarial networks (GANs) and reinforcement learning have significantly advanced the creation of sophisticated and evasive malware. Cybercriminals can now use GANs to generate malware code that is designed to evade detection. GANs are a type of machine learning (ML) algorithm that pits two neural networks against each other, one creating malware and the other attempting to detect it. This iterative process allows GANs to produce zero-day malware that is increasingly difficult for traditional security measures to detect.5 Additionally, using reinforcement learning, cybercriminals can train malware to target specific vulnerabilities in operating systems or software applications. Leveraging these AI capabilities creates more sophisticated and evasive malware, posing a significant cybersecurity threat.

Defending Against AI-Powered Phishing Attacks

AI has empowered cybercriminals to enhance their phishing attacks, but it also offers powerful tools for defense. AI can be used to counter phishing threats, focusing on the four cornerstones of phishing attacks.

Targeting and Profiling

Defending against AI-powered targeting and profiling is complex and challenging due to AI’s speed, ability to mimic genuine human interactions, and access to vast amounts of data. Bots can quickly gather personal and professional information from online sources, often undetected. Although defending against these bots can be challenging, effective strategies are available. In addition to existing security controls, AI-powered capabilities such as behavioral analytics, NLP, anti-bot systems, and privacy monitoring can help detect and prevent phishing attacks. Behavioral analytics can monitor user interactions across platforms, detecting unusual patterns that indicate potential data gathering by bots. NLP can analyze incoming communications for phishing attempts, identifying personalized attacks before they reach employees. Anti-bot systems use AI to distinguish between human users and bots by analyzing subtle behavioral differences and blocking malicious activity in real time. Additionally, automated privacy monitoring tools can continuously scan the web and social media for exposed personal or organizational data, providing real-time alerts when sensitive information is at risk.
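To make the anti-bot idea concrete, the following is a minimal sketch of one behavioral signal such a system might consider: the timing of a client's requests. It is not a description of any particular product. The function name `looks_automated` and every threshold are illustrative assumptions, and a real anti-bot system would combine many more signals and learn its thresholds from data.

```python
from statistics import mean, pstdev


def looks_automated(timestamps, min_requests=10, max_rate=2.0, min_jitter=0.1):
    """Return True when request timing resembles a scripted scraper.

    timestamps: request times in seconds, in ascending order.
    The thresholds are illustrative assumptions, not tuned values.
    """
    if len(timestamps) < min_requests:
        return False  # too little evidence to judge either way

    # Time between consecutive requests.
    gaps = [later - earlier for earlier, later in zip(timestamps, timestamps[1:])]
    avg_gap = mean(gaps)
    if avg_gap <= 0:
        return True  # many requests at the same instant

    rate = 1.0 / avg_gap  # requests per second
    # Coefficient of variation: people browse irregularly,
    # while simple bots tend to fire at near-constant intervals.
    jitter = pstdev(gaps) / avg_gap

    return rate > max_rate or jitter < min_jitter


# A client requesting a page every 5 seconds, exactly, is slow but too regular.
steady_bot = [i * 5.0 for i in range(20)]
# A person clicking around at uneven intervals.
person = [0, 3.1, 4.0, 9.7, 10.2, 18.5, 19.0, 27.3, 31.9, 33.0, 41.2, 47.8]

print(looks_automated(steady_bot))
print(looks_automated(person))
```

The sketch checks two things, request rate and regularity, because a scraper can slow down to avoid rate limits and still give itself away through unnaturally even timing.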

Phishing Messages

Defending against AI-powered phishing messages is challenging due to their sophisticated mimicry of human communication and the need for a holistic defense that covers all attack vectors (e.g., email, text messages, phone calls, social media platforms). However, more context-driven implementation of NLP, reinforcement learning, sentiment analysis, and integration of AI into threat intelligence platforms can overcome these challenges. NLP can detect subtle changes in email tone, comparing emails to a known baseline and flagging emotionally charged or manipulative language. A reinforcement learning system can use past phishing attacks to identify new patterns and improve its ability to detect future threats. Connecting AI systems to threat intelligence platforms can dynamically adjust their detection rules, immediately identifying and blocking new phishing variants that have not yet been widely reported.
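The baseline comparison described above can be illustrated with a deliberately simple stand-in. The sketch below uses fixed phrase lists rather than a trained NLP or sentiment model, so it shows only the shape of the check: count how many categories of manipulative language a message contains and compare that count with what is normal for the sender. The cue lists, the function names, and the threshold are all hypothetical.

```python
import re

# Hypothetical cue phrases. A production system would learn these signals
# from labeled messages instead of relying on a fixed list.
CUES = {
    "urgency": ("urgent", "immediately", "within 24 hours", "final notice"),
    "fear": ("suspended", "unauthorized", "locked", "legal action"),
    "call_to_action": ("click here", "verify your account", "confirm your password"),
}


def manipulation_score(text):
    """Return (number of cue categories present, matched phrases per category)."""
    normalized = re.sub(r"\s+", " ", text.lower())
    hits = {
        name: [cue for cue in cues if cue in normalized]
        for name, cues in CUES.items()
    }
    categories = sum(1 for found in hits.values() if found)
    return categories, hits


def flag_message(text, baseline_categories=0, threshold=2):
    """Flag a message whose score exceeds the sender's usual baseline.

    baseline_categories: how many cue categories this sender's mail
    normally contains (an assumed, precomputed value).
    """
    categories, _ = manipulation_score(text)
    return categories - baseline_categories >= threshold


suspicious = (
    "URGENT: your account has been suspended. "
    "Click here to verify your account within 24 hours."
)
routine = "Hi team, the meeting notes are attached. See you Thursday."

print(flag_message(suspicious))
print(flag_message(routine))
```

Scoring against a per-sender baseline rather than a fixed cutoff reflects the point made above: a sender who routinely writes urgent messages is less suspicious than one whose tone changes abruptly.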

Phishing Landing Pages

Defending against AI-powered phishing landing pages is increasingly difficult due to their heightened realism and sophistication. However, these challenges can be mitigated by leveraging advanced technologies such as NLP, AI-powered browser protections (e.g., Microsoft Defender SmartScreen with Microsoft Edge,6 Google Safe Browsing7), and behavioral analysis. Security systems now use AI to detect and block phishing pages by analyzing subtle discrepancies. AI tools compare the code, structure, and behavior of suspected phishing pages against legitimate websites. They also use real-time image recognition to verify logos and design elements. AI-powered browsers and security extensions go a step further. They proactively warn users about potential threats before they land on a harmful page. By assessing the website’s URL, Secure Sockets Layer/Transport Layer Security (SSL/TLS) certificates, and security posture, these tools alert users to potential dangers. AI also tracks user behavior to detect unusual redirects. This can prevent users from unknowingly interacting with phishing pages, adding another layer of defense.
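As a rough illustration of the URL assessment step, the sketch below flags hostnames that closely resemble, but do not match, a trusted domain. It is not how SmartScreen or Safe Browsing work internally. The allowlist, the function name `check_url`, and the similarity threshold are assumptions chosen for the example, and certificate inspection is left out entirely.

```python
from difflib import SequenceMatcher
from urllib.parse import urlsplit

# Hypothetical allowlist of domains the organization actually uses.
TRUSTED_DOMAINS = ("example.com", "example-bank.com")


def check_url(url, trusted=TRUSTED_DOMAINS, threshold=0.8):
    """Return a list of reasons the URL looks risky (empty if none found)."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    reasons = []

    if parts.scheme != "https":
        reasons.append("not served over HTTPS")

    # An exact match or a subdomain of a trusted domain is not a lookalike.
    if host in trusted or any(host.endswith("." + domain) for domain in trusted):
        return reasons

    # A near match to a trusted domain suggests deliberate imitation.
    for domain in trusted:
        similarity = SequenceMatcher(None, host, domain).ratio()
        if similarity >= threshold:
            reasons.append(f"resembles {domain}")

    return reasons


print(check_url("https://examp1e.com/login"))
print(check_url("https://www.example.com/"))
```

String similarity catches simple character swaps such as a digit standing in for a letter. It will miss other tricks, which is one reason real tools also weigh page content, certificates, and reputation data.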

Malware

Defending against AI-powered malware is challenging due to its sophistication in evading known antimalware software. However, these challenges can be overcome by taking advantage of advanced technologies such as ML and GANs. AI can detect anomalies in user behavior that might signal malware activity. It can also compare new malware samples to known variants and predict whether they are part of a GAN-generated threat family. Additionally, AI can detect fileless malware by analyzing its interactions with system resources.
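One simple way to picture behavioral anomaly detection is a statistical comparison between a device's current activity and its own history. The sketch below uses a z-score test as a stand-in for the ML models discussed here. The metric names, the function name `anomalous_metrics`, and the threshold of three standard deviations are illustrative assumptions.

```python
from statistics import mean, stdev


def anomalous_metrics(baseline, current, z_threshold=3.0):
    """Return the names of metrics that deviate strongly from their history.

    baseline: dict mapping a metric name to a list of past observations.
    current: dict mapping a metric name to the latest observation.
    """
    flagged = []
    for name, history in baseline.items():
        if name not in current or len(history) < 2:
            continue  # not enough data to estimate normal behavior

        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # History never varied, so any change at all is unusual.
            if current[name] != mu:
                flagged.append(name)
            continue

        z_score = abs(current[name] - mu) / sigma
        if z_score > z_threshold:
            flagged.append(name)
    return flagged


# Hypothetical per-hour measurements for one workstation.
history = {
    "outbound_mb": [10, 12, 11, 9, 13, 10, 11],
    "processes_spawned": [3, 4, 3, 5, 4, 3, 4],
}
latest = {"outbound_mb": 250, "processes_spawned": 4}

print(anomalous_metrics(history, latest))
```

In this example the sudden jump in outbound traffic stands out while the process count stays within its normal range. A flagged metric is only a prompt for investigation, since legitimate activity such as a large backup can produce the same spike.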

AI can predict new malware trends by correlating threat data across multiple sources, allowing for a more proactive defense. It can also reverse-engineer complex malware and identify its command-and-control infrastructure. For real-time threat detection, AI monitors global network traffic, identifying and blocking rapidly spreading malware. It can even predict the emergence of zero-day malware by analyzing the behavior of malware authors. Finally, AI can deploy customized countermeasures, such as virtual sandboxes, to contain and analyze malware without affecting real systems.

Conclusion

In the ongoing battle between cybercriminals and security experts, AI has emerged as both a formidable weapon and an essential defense mechanism. While AI-driven attacks have become more sophisticated and harder to detect, AI-powered defenses have risen to the challenge, providing robust and adaptive solutions that protect users and enterprises and their data. By harnessing the power of AI to anticipate, identify, and counteract malicious activities, cybersecurity teams can stay one step ahead of attackers, ensuring a safer and more secure digital world. As AI technology continues to evolve, so too will the strategies and tools to defend against the next generation of cyberthreats. Enterprises must prioritize the ethical use of AI, particularly in areas such as data privacy, profiling, decision making, and the distinction between offensive and defensive applications. Adherence to government regulations is essential. By carefully considering these factors, enterprises can harness the dual-use nature of AI technologies to achieve significant societal benefits and mitigate potential risk, fostering a sense of optimism and hope for the future.

Endnotes

1 Verizon Business, 2024 Data Breach Investigations Report, 2024

2 Sophos News, “Scammers Deepfake CEO’s Voice to Talk Underling Into $243,000 Transfer”

3 Microsoft Defender Security Research Team, “How Artificial Intelligence Stopped an Emotet Outbreak,” Microsoft Security, 14 February 2018

4 Krieg, G.; Kopan, T.; “Is This the Email That Hacked John Podesta’s Account?,” CNN, 28 October 2016

5 Barry, C.; “5 Ways Cybercriminals Are Using AI: Malware Generation,” Barracuda, 16 April 2024

6 Barr, A.; Coulter, D.; et al.; “Microsoft Edge Support for Microsoft Defender SmartScreen,” Microsoft Learn, 18 July 2024

7 Google Safe Browsing, “What Is Safe Browsing?”

OLU ADEKOYA | CISM, CRISC, CITP

Is an experienced cybersecurity leader and strategist with a proven track record of safeguarding critical digital assets in line with enterprise security risk appetite. With more than 15 years of experience in government, finance, telecommunications, and consulting, he deeply understands the evolving threat landscape. As the founder of Cyberpinnacle, Adekoya empowers organizations to thrive in the digital age by delivering innovative cybersecurity solutions. His expertise spans information security governance and management, risk management, enterprise security architecture, secure systems implementation, operational security management, and cyber and information security research. Adekoya has published articles, influenced government cybersecurity policy, helped shape the future of cybersecurity as a BCS CITP assessor, and mentored aspiring security professionals.